I recently had a conversation with the CIO of a well-respected company who informed me that his “data center people” had completely mismanaged his data center space, and that the company now had to look at leasing additional capacity or pursuing virtualization more aggressively to solve the problem. Furthermore, he was powerless to drive change because the data center facilities people worked for a different organization. To top it off, it frustrated him to no end that, in his mind, they simply did not understand the IT equipment or technologies being deployed. Unfortunately, it’s a refrain that I hear over and over again. It speaks to the heart of the problems with understanding data center issues in the industry.

How Data Center Capacity Planning Really Works!

Data Center managers are by their very nature extremely conservative people. At the root of this conservatism is the understanding that if and when a facility goes down, it is their bottoms on the bottom line. As such, risk takers are very few and far between in this industry. I don’t think I would get much argument from most business-side managers, who would agree to that in a heartbeat. But before we hang the albatross around the neck of our facilities management brethren, let’s take a look at some of the challenges they actually have.

Data Center Capacity Planning is a swirling vortex of science, art, best guesswork, and bad information, with a sprinkling of cult of personality for taste. One would think that it should be a straight numbers-and-math game, but it’s not. First and foremost, the currency of Data Center Capacity Management and Planning is power. Simple, right? Well, we shall see.

Let’s start at a blissful moment in time, when the facility is shiny and new. The floor shines from its first cleaning, the VESDA (Very Early Smoke Detection Apparatus) equipment has not yet begun to throw off false positives, and all is right with the world. The equipment has been fully commissioned and is now ready to address the needs of the business.

Our Data Center Manager is full of hope and optimism. He or she is confident that this time it will be much different than the legacy problems they had to deal with before. They now have the perfect mix of power and cooling to handle any challenge to be thrown at them. They are then approached by their good friends in Information Services with their first requirement. The business has decided to adopt a new application platform which will of course solve all the evils of previous installations.

It’s a brand new day, a new beginning. The Data Center Manager asks the IT personnel how many servers are associated with this new deployment. They also ask how much power those servers will draw so that the room can be optimized for this wonderful new solution. The IT personnel may be using consultants, or maybe they are providing the server specifications themselves. In advanced cases they may even have standardized the types and classes of servers they use. How much power? Well, the nameplate on the server says that each of these bit-crunching wonders will draw 300 watts apiece. As this application is bound to be a huge draw on resources, they inform the facilities team that approximately 20 machines at 300 watts each are going to be deployed.

The facilities team knows that no machine ever draws its nameplate rating once ‘in the wilds’ of the data center, and therefore for capacity planning purposes they ‘manually’ apply a 30% reduction to the server deployment numbers. You see, it’s not that they don’t trust the IT folks, it’s just that they generally know better. So that nets out to a 90 watt reduction per server, bringing the “budgeted power allocation” down to 210 watts per server. This is an important number to keep in mind. You now have two ratings that you have to deal with: Nameplate and Budgeted. Advanced data center users may use even more scientific methods of testing to derive their budgeted amount. For example, they may run test software on the server designed to drive the machine to 100% CPU utilization, 100% disk utilization, and the like. Interestingly, even after these rigorous tests, the machine never gets close to nameplate. Makes you wonder what that rating is even good for, doesn’t it? Our data center manager doesn’t have that level of sophistication, so he is using a 30% reduction. Keep these numbers in mind as we move forward.
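To make the two ratings concrete, here is a minimal Python sketch of the de-rating arithmetic. The function name and the idea of expressing the reduction as a whole percentage are my own illustrative choices; the 300 watt nameplate, 30% reduction, and 20 servers come from the story above.

```python
def budgeted_watts(nameplate_watts, derate_percent=30):
    """Return the planning ("budgeted") figure after de-rating the nameplate."""
    return nameplate_watts * (100 - derate_percent) / 100


servers = 20
nameplate = 300  # watts per server, as printed on the nameplate

per_server_budget = budgeted_watts(nameplate)
print("Nameplate total (W):", nameplate * servers)
print("Budgeted per server (W):", per_server_budget)
print("Budgeted total (W):", per_server_budget * servers)
```

A shop that load-tests its servers would simply replace the flat percentage with its measured figure; the two-number bookkeeping stays the same.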

The next question is typically whether these servers are dual or single corded. Essentially, will these machines have redundancy built into the power supplies so that in the event of a power loss they can still operate from another source of electricity? Well, as every good business manager, IT professional, and data center manager knows, this is a good thing. Sure, let’s make them dual corded.

The data center manager, now well armed with information, begins building out the racks and pulls the power whips from diverse PDUs (power distribution units) to the location of those racks to ensure that the wonders of dual cording can come to full effect.

The servers arrive, they are installed, and in a matter of days the new application is humming along just fine, running into all kinds of user adoption issues, unexpected hiccups, budget overruns, etc. OK, maybe I am being a bit sarcastic and jaded there, but I think it holds for many installations. All in all a successful project, right? I say sure. But do all parties agree today? Tomorrow? Three years from now?

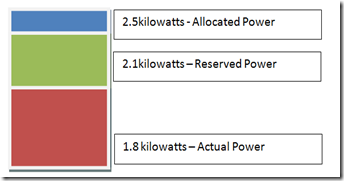

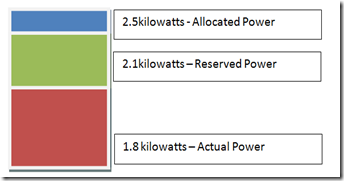

Let’s break this down a bit more on the data center side. The data center manager has to allocate power for the deployment. He has already de-rated the server draw, but there is a certain minimum amount of infrastructure he has to deploy regardless. The power being pulled from those PDUs takes up valuable slots inside that equipment. Think of your stereo equipment at home: there are only so many speakers you can connect to your base unit, no matter how loud you want it to get. The data center manager had to make certain decisions based upon the rack configuration. If we believe that they can squeeze 10 of these new servers into a rack, the data center manager has pulled enough capacity to address 2.1 kilowatts per rack (210 watts × 10 servers). With twenty total servers, that means he has two racks with 2.1 kilowatts of base load each. Sounds easy, right? It’s just math. “And Mike, you said it was harder than regular math. You lied.” Did I? Well, it turns out that physics is physics and, as Scotty from the Enterprise taught us, “You cannot change the laws of physics, Jim!” It’s likely that the power capacity allocated to the rack will actually be a bit over 2.1 kilowatts because of the circuit sizes that are available. For example, he or she may have needed only 32 amps of power, but because of those pesky connections had to pull two 20 amp circuits. Let’s say for the sake of argument that in this case he has to reserve 2.5 kilowatts per rack as a function of the physical infrastructure requirements. You start to see a little waste, right? It’s 400 watts per rack, a bit more than one server’s nameplate draw, so you might think it’s not terribly bad. As a business manager, you’re frustrated with that waste, but you might be OK with it, especially since it’s a new facility and you have plenty of capacity.
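The rack math and the “physics tax” can be sketched in a few lines of Python. Treat this as an illustration only: the 500 watt allocation increment is a value I made up so that the rounding lands on the 2.5 kilowatts used in this story, and real circuit sizing depends on voltage, breaker ratings, and local code.

```python
def rack_budget_watts(servers_per_rack, budgeted_watts_per_server):
    """Budgeted load for one rack."""
    return servers_per_rack * budgeted_watts_per_server


def allocated_watts(budget_watts, increment_watts):
    """Round the budget up to the next whole increment of deliverable power.

    increment_watts is a hypothetical stand-in for "the circuit sizes
    you can actually pull"; it is not a real electrical calculation.
    """
    increments = -(-budget_watts // increment_watts)  # ceiling division
    return increments * increment_watts


budget = rack_budget_watts(10, 210)         # 2100 W of budgeted load
allocation = allocated_watts(budget, 500)   # 2500 W actually set aside
stranded = allocation - budget              # 400 W lost to physics

print("Budget per rack (W):", budget)
print("Allocated per rack (W):", allocation)
print("Stranded per rack (W):", stranded)
```

The point of the rounding step is that power is handed out in chunks, so the gap between budget and allocation shows up on every rack you deploy.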

But wait! Remember that dual cording thing? Now you have to double the power you are setting aside. You have to make sure you have enough power to keep the servers running. Usually this comes from another PDU so that you can survive a single PDU failure. Additionally, you need to ensure that each side (each cord) has enough power reserved to fail over. In some cases the total load of the server is divided between the two power supplies; in other cases, power is drawn from the primary with a small trickle of draw from the redundant connection. If the load is divided between both power supplies, you are effectively drawing HALF of the total reserved power. If it’s the situation where the servers draw full load off one supply and a trickle off the second, you are actually drawing the correct amount on one leg and dramatically less than HALF on the second. Either way, the power is allocated and reserved, and I bet it’s more than you thought when we started out this crazy story. Well, hold tight, because it’s going to get even more complicated in a bit.
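Here is a rough Python sketch of what dual cording does to the numbers, using the 2.5 kilowatt allocation and 2.1 kilowatt budgeted draw from this story. The function names and the 5 percent trickle figure are assumptions for illustration; actual trickle draw depends on the power supplies.

```python
def reserved_watts(allocated_watts_per_rack, cords=2):
    """Power set aside per rack when each cord must be able to carry the rack."""
    return allocated_watts_per_rack * cords


def leg_draw_watts(total_draw_watts, mode, trickle_percent=5):
    """Return (leg A, leg B) draw for one rack.

    mode "shared":  both power supplies split the load evenly.
    mode "primary": one supply carries the load, the other only trickles.
    trickle_percent is a made-up illustrative value.
    """
    if mode == "shared":
        half = total_draw_watts / 2
        return (half, half)
    if mode == "primary":
        return (total_draw_watts, total_draw_watts * trickle_percent / 100)
    raise ValueError("mode must be 'shared' or 'primary'")


per_rack_reserved = reserved_watts(2500)
print("Reserved per rack (W):", per_rack_reserved)
print("Reserved for two racks (W):", per_rack_reserved * 2)
print("Shared legs (W):", leg_draw_watts(2100, "shared"))
print("Primary/trickle legs (W):", leg_draw_watts(2100, "primary"))
```

Either mode leaves the reservation unchanged; only the draw you would see on a meter for each leg differs.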

Right now, any data center manager in the world is reading this and screaming at the remedial nature of this post. This is Facilities Management 101, right after the seminar entitled ‘The EPO button is rarely your friend’. In fact, I am probably insulting their intelligence, because there are even more subtleties than what I have outlined here. But my dear facilities comrade, it’s not to you that I am writing this section. It’s to the business management and IT folks. With physics being its pesky self, combined with some business decisions, you are effectively taking down more power than you initially thought. Additionally, you now have a tax on future capacity, as the load associated with physics and redundancy is forever in reserve, not to be touched without great effort, if at all.

Dual cording is not bad. Redundancy is not bad. It’s a business risk decision, and that’s something you can understand; in fact, as a business manager, I would be willing to bet it’s something you do every day in your job. You are weighing the impact of an outage on the business against the actual cost. One can even calculate the cost of such a decision fairly easily by taking a proportional allocation of your capital cost from an infrastructure perspective and weighing it against the economic impact of not having certain tools and applications available. Even when this is done and well understood, a strange phenomenon of amnesia sets in, and in a few months or years the same business manager may look at the facility and give the facilities person a hard time for not utilizing all the power. To Data Center Managers: having sat as a Data Center Professional for many years, I’m sad to say you can expect to have this “Reserved” power conversation with your manager over and over again, especially when things get tight in terms of capacity left. To business managers: bookmark this post and read it about every 6 months or so.

Taking it up a notch for a moment…

That last section introduced the concept of Reserved Power. Reserved Power is a concept that sits at the Facility level of Capacity Planning. When a data center hall is first built out, there are three terms and concepts you need to know. The first is Critical load (sometimes called IT load). This is the power available to IT and computer equipment in your facility. The second is called Non-Critical load, which is the power allocated to things like lighting, mechanical systems, your electrical plant, generators, etc. This is what I commonly call ‘Back of the house’ or ‘Big Iron’. The last term is Total load. Total load is the total amount of power available to the facility and can usually be calculated by adding Critical and Non-Critical loads.

A Facility is born with all three facets. You generally cannot have one without the others. I plan on having a future post called ‘Data Center Metrics for Dummies’ which will explore the interconnection between these. For now, let’s keep it really simple.

The wonderful facility we have built has a certain amount of IT gear that it will hold. Essentially every server we deploy into the facility will subtract from the total amount of Critical Load available for new deployments. As we deduct the power from the facility we are allocating that capacity out. In our previous example we deployed two racks at 2.5kilowatts (and essentially reserved capacity for two more for redundancy). With those two racks we have allocated enough power for 5 kilowatts of real draw and have reserved 10 kilowatts in total.

Before people get all mad at me, I just want to point out that some people don’t count the dual cording extra because they essentially de-rate the room with the understanding that everything will be dual corded. I’m keeping it simple for people to understand what’s actually happening.

OK, back to the show. As I mentioned, those racks would really only draw 2.1 kW each at full load (we have essentially stranded 400 watts per rack of potential capacity, and combined it’s 800 watts across the two racks). As a business we already knew this, but we still have to calculate it out and apply our “Budgeted Power” at the room level. So, across our two racks we have an allocated power of 5 kilowatts, with a budgeted amount of 4.2 kilowatts.

Now here is where our IT friends come into play and make things a bit more difficult. That wonderful application that was going to solve world hunger for us, and was going to be such a beefy application from a resources perspective, is not living up to its reputation. Instead of driving 100% utilization, it’s sitting down around 8 percent per box. In fact, estimated worldwide server utilization sits between 5 and 14 percent. Most server manufacturers have built their boxes so that they draw less power at lower utilizations. Therefore our 210 watts per server might be closer to 180 watts per server of “Actual Load”. That’s another 30 watts per server. So while we have allocated 2.5 kilowatts and budgeted 2.1 kilowatts per rack, we are only drawing 1.8 kilowatts. We have two racks, so we double it. So now we are in a situation where we are not using 700 watts per rack, or 1.4 kilowatts across our two racks. Ouch, that’s potentially 28% of the allocated power wasted!
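If you want to check my arithmetic, the three numbers per rack (allocated, budgeted, actual) line up like this in a small Python sketch. The variable and function names are just mine for illustration; the wattages are the ones from the example.

```python
RACKS = 2
SERVERS_PER_RACK = 10
ALLOCATED_PER_RACK = 2500   # watts physically set aside
BUDGETED_PER_SERVER = 210   # watts, de-rated nameplate
ACTUAL_PER_SERVER = 180     # watts, metered at low utilization


def rack_summary(allocated, budgeted_per_server, actual_per_server, servers):
    """Return (budgeted, actual, unused, unused percent) for one rack."""
    budgeted = budgeted_per_server * servers
    actual = actual_per_server * servers
    unused = allocated - actual
    unused_percent = unused * 100 / allocated
    return budgeted, actual, unused, unused_percent


budgeted, actual, unused, unused_percent = rack_summary(
    ALLOCATED_PER_RACK, BUDGETED_PER_SERVER, ACTUAL_PER_SERVER, SERVERS_PER_RACK
)

print("Budgeted per rack (W):", budgeted)
print("Actual per rack (W):", actual)
print("Unused per rack (W):", unused)
print("Unused across racks (W):", unused * RACKS)
print("Unused share of allocation (%):", unused_percent)
```

Note that this compares actual draw against the single-cord allocation; if you also count the dual-cord reservation, the unused share is larger still.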

The higher IT and applications drive the utilization rate, the less waste you will have. Virtualization can help here, but it’s not without its own challenges, as we will see.

Big Rocks Little Rocks…

Now luckily, as a breed, data center managers are a smart bunch and they have a variety of ways to try to reduce this waste. It goes back to our concept of budgeted or reserved power combined with our “stereo jacks” in the PDU. As long as we have some extra jacks, the Data Center Manager can return to our two racks and artificially set a lower power budget per rack. This time, after metering the power for a while, he makes the call to limit each rack’s allocation to 2 kilowatts. He could go to 1.8 kilowatts, but remember he is conservative and still wants to give himself some cushion. He can then deploy new racks or cabinets and assign the power he has freed up to them. He can continue this until he runs out of power or out of slots on the PDU. This is a manual process that is physically managed by the facilities manager. There is an emerging technology called power capping which will allow you to do this in software on a server-by-server basis, and it will be hugely impactful in our industry. It’s just not ready for prime time yet.
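The re-budgeting step can be sketched in Python as well. The metered 1.8 kilowatts, the 200 watt cushion, and the 2.5 kilowatt original allocation come from the example; the free watts and PDU position counts in the second half are numbers I invented purely to show that whichever resource runs out first sets the limit.

```python
def new_rack_budget(metered_watts, cushion_watts):
    """Lowered per-rack budget: what the meter shows plus a safety cushion."""
    return metered_watts + cushion_watts


def racks_that_fit(free_watts, free_pdu_positions,
                   watts_per_new_rack, positions_per_new_rack):
    """New racks are limited by power or by PDU positions, whichever is scarcer."""
    by_power = free_watts // watts_per_new_rack
    by_positions = free_pdu_positions // positions_per_new_rack
    return min(by_power, by_positions)


old_allocation = 2500
budget = new_rack_budget(1800, 200)        # 2000 W per rack
freed_per_rack = old_allocation - budget   # 500 W handed back per rack
print("New budget per rack (W):", budget)
print("Freed across two racks (W):", freed_per_rack * 2)

# Hypothetical room state: 10 kW free but only 6 open PDU positions,
# with each new dual-corded rack needing 2 positions.
print("New racks that fit:", racks_that_fit(10000, 6, 2000, 2))
```

In the hypothetical case the power would cover five more racks, but the PDU positions only cover three, which is exactly the “stereo jacks” constraint.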

This inefficiency in allocation creates strange gaps and holes in data centers. It’s a phenomenon I call Big Rocks, Little Rocks, and like everything in this post it is somehow tied to physics.

In this case it was my freshman-year physics class in college. The professor was at the front of the class with a bucket full of good-sized rocks. He politely asked if the bucket was full. The class of course responded in the affirmative. He then took out a smaller bucket of pebbles and poured, sifted, and vigorously shook the heck out of the larger bucket until every last pebble was emptied into the bucket with the big rocks. He asked again, “Now is it full?” The class responded once more in the affirmative, and he pulled out a bucket of sand. He repeated the sifting and shaking and emptied the sand into the bucket. “It’s finally full now, right?” The class nodded one last time in the affirmative, and he produced a small bucket of water and poured it into the bucket as well.

That ‘Is the bucket full’ exercise is a lot like the capacity planning that every data center manager eventually has to get very good at. Those holes in capacity at a rack level or PDU level that I spoke about are the spaces for servers and equipment to ultimately fit into. At first it’s easy to fit in big rocks, then it gets harder and harder. You are ultimately left trying to manage to those small spaces of capacity, trying to utilize every last bit of energy in the facility.

This can be extremely frustrating to business managers and IT personnel. Let’s say you do a great job of informing the company how much capacity you actually have in your facility. If there is no knowledge of our “rocks” problem, you can easily get yourself into trouble.

Let’s go back for a second to our example facility. Time has passed and our facility is now nearly full. Out of the 1 MW of total capacity, we have been very aggressive in managing our holes and still have 100 kilowatts of capacity. The IT personnel have a new, database-intensive application that will draw 80 kilowatts, and because the facility manager has done a good job of managing his facility, there is every expectation that it will be just fine. Until, of course, they mention that these servers have to be contiguous and close together for performance or even functionality purposes. The problem, of course, is that you now have a large rock that you need to squeeze into small-rock places. It won’t work. It may even force you either to move other infrastructure around in your facility, impacting other applications and services, or to get more data center space.
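A tiny Python sketch shows why 100 kilowatts free does not mean an 80 kilowatt deployment fits. The 100 and 80 kilowatt figures are from the example; the way I have split the free capacity into five zones is entirely made up, as is the simplification that “contiguous” means “inside a single zone”.

```python
def fits_by_total(free_kw_by_zone, needed_kw):
    """The business-side view: is there enough free power in the building?"""
    return sum(free_kw_by_zone) >= needed_kw


def fits_contiguously(free_kw_by_zone, needed_kw):
    """The facilities view: is there one hole big enough for the whole rock?"""
    return max(free_kw_by_zone, default=0) >= needed_kw


# Hypothetical leftover holes, in kW, scattered across the hall.
free_kw_by_zone = [30, 25, 20, 15, 10]

print("Total free (kW):", sum(free_kw_by_zone))
print("Fits by total:", fits_by_total(free_kw_by_zone, 80))
print("Fits contiguously:", fits_contiguously(free_kw_by_zone, 80))
```

Reporting only the total is what gets you into trouble; reporting the largest available hole alongside it tells IT what size of rock can actually land.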

You see, the ‘Is it full’ exercise does not work in reverse. You cannot fill a bucket with water, then sand, then pebbles, then rocks. Again, lack of understanding can lead to ill will or to the perception that the data center manager is not doing a good job of managing his facility when in fact he is being very aggressive in that management. It’s something the business side and IT side should understand.

Virtualization Promise and Pitfalls…

Virtualization is a topic unto itself that is pretty vast and interesting, but I did want to point out some key things to think about. As you hopefully saw, server utilization has a huge impact on power draw. The higher the utilization, the better the performance from a power perspective. Additionally, many server manufacturers have certain power ramps built into their equipment, where you might see a disproportionately large jump in power consumption going from 11 percent to 12 percent utilization, for example. It has to do with the throttling of power consumption I mentioned above. This is a topic that most facility managers have no experience with or knowledge of, as it has more to do with server design and performance. If your facility manager is aggressively managing your facility as in the example above and virtualization is introduced, you might find yourself tripping circuits as you drive the utilization higher and it crosses these internal utilization thresholds. HP has a good paper talking about how this works. If you pay particular attention to page 14, the lower line is the throttled processor as a function of utilization. The upper line is full speed as a function of utilization, and their dynamic power regulation feature is the one that jumps up to full speed at 60% utilization. This gives the box full-speed performance only at high utilizations. It’s a feature that is turned on by default in HP servers. Other manufacturers have similar technologies built into their products as well. Typically your facilities people would not be reading such things. Therefore, when considering virtualization and its impacts, it’s imperative that the IT folks and Data Center managers work on it jointly.
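To show how crossing a threshold can bite an aggressively budgeted rack, here is a deliberately simplified Python model. Only the 60% switch point and the 2 kilowatt rack budget come from this post; the idle and peak wattages and the straight-line power curves are numbers I invented for illustration and do not describe any real server.

```python
def server_watts(utilization_percent, idle_watts=175,
                 throttled_peak_watts=200, full_peak_watts=235,
                 threshold_percent=60):
    """Toy power model: throttled curve below the threshold, full speed at or above.

    All wattages are hypothetical; the jump at the threshold is the point.
    """
    if utilization_percent < threshold_percent:
        peak = throttled_peak_watts
    else:
        peak = full_peak_watts
    return idle_watts + (peak - idle_watts) * utilization_percent / 100


def rack_watts(utilization_percent, servers=10):
    """Draw for a rack of identical servers at the same utilization."""
    return server_watts(utilization_percent) * servers


RACK_BUDGET_WATTS = 2000  # the artificially lowered budget from earlier

for utilization in (8, 59, 60):
    draw = rack_watts(utilization)
    status = "over budget" if draw > RACK_BUDGET_WATTS else "within budget"
    print(utilization, "% utilization:", draw, "W,", status)
```

In this toy model the rack sits comfortably inside its budget right up to 59 percent and then steps over it at 60, which is why the utilization targets for a virtualization project need to be shared with whoever set the rack budgets.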

I hope this was at least partially valuable and explained some things that you may have considered black-box or arcane data center challenges. Keep in mind that with this series I am trying to educate all sides on the challenges we are facing together.

/Mm

of beans harvested from the field at the ceremony we still had some ways to go before all construction and capacity was ready to go. One of the key missing components was the delivery and installation of a transformer for one of the substations required to bring the facility up to full service. The article denotes that I was upset that the PUD was slow to deliver the capacity. Capacity I would add that was promised along a certain set of timelines and promises and commitments were made and money was exchanged based upon those commitments. As you can see from the article, the money exchanged was not insignificant. If Mr. Culbertson felt that I was a bit arrogant in demanding a follow through on promises and commitments after monies and investments were made in a spirit of true partnership, my response would be ‘Welcome to the real world’. As far as being cooperative, by April the construction had already progressed 15 months since its start. Hardly a surprise, and if it was, perhaps the 11 acre building and large construction machinery driving around town could have been a clue to the sincerity of the investment and timelines. Harsh? Maybe. Have you ever built a house? If so, then you know you need to make sure that the process is tightly managed and controlled to ensure you make the delivery date.
