In my current role (and given my past) I often get asked about the concept of Data Center Containers by many looking at this unique technology application to see if it’s right for them. In many respects we are still in the early days of this technology approach, and any answer one gives definitely has a variable shelf life given the amount of attention the manufacturers and the industry are giving this technology set. Still, I thought it might be useful to try and jot down a few key things to think about when looking at the data center containers and modularized solutions out there today.

I will do my best to balance this view across four different axes: the Technology, Real Estate, Financial, and Operational considerations. A sort of ‘Executive’s View’ of this technology. I do this because containers as a technology cannot and should not be looked at from a technology perspective alone. To do so is complete folly, and you are asking for some very costly problems down the road if you ignore the other factors. Many love to focus on the interesting technology characteristics or the efficiency benefits that this technology can bring to bear for an organization, but to implement this technology (like any technology really) you need to have a holistic view of the problem you are really trying to solve.

So before we get into containers specifically, let’s take a quick look at why containers have come about.

The Sad Story of Moore’s Orphan

In technology circles, Moore’s law has come to be applied to a number of different technology advancement and growth trends and has come to represent exponential growth curves. The original Moore’s law was actually an extrapolation and forward-looking observation based on the fact that ‘the number of transistors per square inch on integrated circuits had doubled every year since the integrated circuit was invented.’ As my good friend Dileep Bhandarkar, a long-time Intel Technical Fellow now with Microsoft, routinely states – Moore has now been credited with inventing the exponential. It’s a fruitless battle, so we may as well succumb to the tide.

If we look at the technology trends across all areas of Information Technology, whether it be processors, storage, memory, or whatever, the trend has clearly fallen into this exponential pattern. In terms of numbers of instructions, amount of storage or memory, network bandwidth, or even tape technology, it’s clear that technology has been marching ahead at a staggering pace over the last 20 years. Isn’t it interesting, then, that the place where all of this wondrous growth and technological wizardry has manifested itself (the data center, computer room, or data hall) has been moving along at a near pseudo-evolutionary standstill? In fact, if one truly looks at the technologies present in most modern data center designs, one would find only small differences from the very first special purpose data room built by IBM over 40 years ago.

Data Centers themselves have a corollary to the beginning of the industrial revolution. In fact I am positive that Moore’s observations would hold true as civilization transitioned from an agricultural based economy to that of an industrialized one. In fact one might say that the current modularization approach to data centers is really just the industrialization of the data center itself.

In the past, each and every data center was built lovingly by hand by a team of master craftsmen and data center artisans. Each was a one-of-a-kind tool built to solve a set of problems. Think of the eco-system that has developed around building these modern day castles: architects, engineering firms, construction firms, specialized mechanical industries, and a host of others that all come together to create each and every masterpiece. So too did those who built plows and hammers, clocks and sextants, and the tools of the previous era specialize in making each item, one by one. That is, of course, until the industrial revolution.

The data center modularization movement is not limited to containers, and there is some incredibly ingenious stuff happening in this space today outside of containers, but one can easily see the industrial benefits of mass producing such technology. This approach simply creates more value, reduces cost and complexity, makes technology cheaper, and simplifies the whole. No longer are companies limited to working with the arcane forces of data center design and construction. Many of these components are being pre-packaged, pre-manufactured, and more aggregated, reducing the complexity of the past.

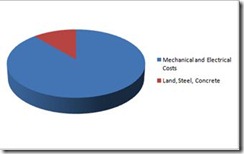

And why shouldn’t it? Data Centers live at the intersection of Information and Real Estate. They are more like machines than buildings but share common elements of both buildings and technology. All one has to do is look at it from a financial perspective to see how true this is. In terms of construction, the cost of a data center breaks down in the following simple way. Roughly 85% of the total cost to build the facility is made up of the components, labor, and technology to deal with the distribution or cooling of the electrical consumption.

This of course leaves roughly 15% of the costs relegated to land, steel, concrete, bushes, and more of the traditional real estate components of the build. Obviously these percentages differ market to market but on the whole they are close enough for one to get the general idea. It also raises an interesting question as to what is the big drive for higher density in data centers, but that is a post for another day.

As a result of this incredible growth there has been an explosion, a Renaissance if you will, in Data Center design and approach, and the modularization effort is leading the way in causing people to think differently about the data centers themselves. It’s a wonderful time to be part of this industry. Some claim that this change is being driven by the technology. Others claim that the drivers behind this change have to do with the tough economic times and are more financial. The true answer (as in all things) is that it’s a bit of both, plus some additional factors.

Driving at the intersection of IT Lane and Building Boulevard

From a technology perspective, the driver behind this change is the fact that most existing data centers are not designed or instrumented to handle the demands of the changing technology requirements occurring within the data center today.

Data Center managers are being faced with increasingly varied redundancy and resiliency requirements within the footprints that they manage. They continue to support environments that rely heavily upon the infrastructure to provide robust reliability to ensure that key applications do not fail. But applications are changing. Increasingly there are applications that do not require the same level of infrastructure to be deployed because the application is built in such a way that it is more geo-diverse or server-diverse. Perhaps the internal business units have deployed some test servers or lab / R&D environments that do not need this level of infrastructure. With the number of RFPs out there demanding that software and application developers solve the redundancy issue in software rather than through large capital spend on behalf of the enterprise, this is a trend likely to continue for some time. Regardless of the reason for the variability challenges that data center managers are facing, the truth is that those challenges are greater than ever before.

Traditional data center design cannot meet these needs without additional waste or significant additional expenditure. Compounding this are the ever-increasing requirements for higher power density and the resulting cooling requirements. This is complicated by the fact that there is no uniformity of load across most data centers. You have certain racks or areas driving incredible power consumption and requiring significant density, and other environments, perhaps legacy, perhaps under-utilized, which run considerably less dense. In a single room you could see rack power densities vary by as much as 8 kW per rack! You might have a bunch of racks drawing 4 kW per rack and an area drawing 12 kW per rack or even denser. This can strand valuable data center resources and make data center planning very difficult.

Additionally looming on the horizon is the spectre or opportunity of commodity cloud services which might offer additional resources which could significantly change the requirements of your data center design or need for specific requirements. This is generally an unknown at this point, but my money is that the cloud could significantly impact not only what you build, but how you build it. This ultimately drives a modularized approach to the fore.

From a business / finance perspective companies are faced with some interesting challenges as well. The first is that the global inventory for data center space (from a leasing or purchase perspective) is sparse at best. This is resulting from a glut of capacity after the dotcom era and the resulting land grab that occurred after 9/11 and the Finance industry chewing up much of the good inventory. Additive to this is the fact that there is a real reluctance to build these costly facilities speculatively. This is a combination of how the market was burned in the dotcom days, and the general lack of availability and access to large sums of capital. Both of these factors are driving data center space to be a tight resource.

In my opinion the biggest problem across every company I have encountered is that of capacity planning. Most organizations cannot accurately predict how much data center capacity they will need in the next year, let alone 3 or 5 years from now. It’s a challenge that I have invested a lot of time trying to solve, and it’s just not that easy. But this lack of predictability exacerbates the problems for most companies. By the time they realize they are running out of capacity or need additional capacity, it becomes a time-to-market problem. Given the inventory challenge I mentioned above, this can put a company in a very uncomfortable place, especially if you take the all-in industry average timeline for building a traditional data center yourself, which is somewhere between 106 and 152 weeks.

The high upfront capital costs of a traditional data center build can also be a significant endeavor and business impact event for many companies. The amount of spending associated with the traditional method of construction could cripple a company’s resources and/or force it to focus its resources on something non-core to the business. Data Centers can and do impact the balance sheet. This is a fact that is not lost on the Finance professionals in the organization looking at this type of investment.

With the need for companies to remain agile and move quickly, they are looking for the same flexibility from their infrastructure. An asset like a large data center built to requirements that no longer fit can create a drag on a company’s ability to stay responsive as well.

None of this even acknowledges some basic cost factors that are beginning to come into play around the construction itself. The construction industry is already forecasting that for every 8 people retiring in the key trades (mechanical, electrical, pipe-fitting, etc) associated with data centers only one person is replacing them. This will eventually mean higher cost of construction and an increased scarcity in construction resources.

Modularized approaches help all of these issues and challenges and provide the modern data center manager a way to solve for both the technology and business level challenges. It allows you to move to Site Integration versus Site Construction. Let me quickly point out that this is not some new whiz bang technology approach. It has been around in other industries for a long long time.

Enter the Container Data Center

While it is not the only modularized approach, this is the landscape in which the data center container has made its entry.

First and foremost, let me say that while I am a strong proponent of containment in every aspect, containers can add great value or simply not be a fit at all. They can drive significant cost benefits or end up costing significantly more than traditional space. The key is that you need to understand what problem you are trying to solve and that you have a couple of key questions answered first.

So let’s explore some of these things to think about in the current state of Data Center Containers out there today.

What problem are you trying to solve?

The first question to ask yourself when evaluating whether containerized data center space would be a fit is which problem you are trying to solve. In the past, the driver for me had more to do with solving deployment related issues. We had moved the base unit of measure from servers, to racks of servers, and ultimately to containers. To put it in more general IT terms, it was a move from deploying tens to hundreds of servers per month, to hundreds and thousands of servers per month, to tens of thousands of servers per month. Some people look at containers as Disaster Recovery or Business Continuity solutions. Others look at them from the perspective of HPC clusters or large uniform batch processing requirements and modeling. You must remember that most vendor container solutions out there today are modeled on hundreds to thousands of servers per “box”. Is this a scale that is even applicable to your environment? If you think it’s as simple as just dropping a container in place and then deploying servers into it as you will, you will have a hard learning curve in the current state of ‘container-world’. It just does not work that way today.

Additionally, one has to think about the type of ‘IT Load’ they will place inside of a container. Most containers assume similar or like machines in bulk. Rare to non-existent is the container that can take a multitude of different SKUs in different configurations. Does your use drive uniformity of load or consistent use across a large number of machines? If so, containers might be a good fit. If not, I would argue you are better off in traditional data center space (whether traditionally built or modularly built).

I will assume for purposes of this document that you feel you have a good reason to use this technology application.

Technical things to think about . . .

For purposes of this document I am going to refrain from getting into a discussion or comparison of particular vendors (except in aggregate and in generalizations), as I will not endorse any vendor over another in this space. Nor will I get into an in-depth discussion around server densities, compute power, storage, or other IT-specific comparisons for the containers. I will trust that your organizations have experts, or at least people knowledgeable about which servers, network gear, operating systems, and the like you need for your application. There is quite a bit of variety out there to choose from, and you are a much better judge of such things for your environments than I. What I will talk about here from a technical perspective are things that you might not be thinking of when it comes to the use of containers.

Standards – What’s In? What’s Out?

One of the first things you need to do when looking at containers is to make sure that your facilities experts take a comprehensive look at the data center aspects of each vendor’s container. Why? The answer is simple. There are no set industry standards when it comes to Data Center Containers. This means that each vendor might have their own approach to what goes in, and what stays out of, the container. This has some pretty big implications for you as the user. For example, let’s take a look at batteries or UPS solutions. Some vendors provide this function in the container itself (for ride-through or other purposes), while others assume this is part of the facility you will be connecting the container into. How are the UPS and batteries configured in your container? Some configurations might have some interesting harmonics issues that will not work for your specific building configuration. It’s best to have both IT and Facilities people look jointly at the solutions you are choosing, and to make sure you know what base services you will need to provide to the containers from the building, what the containers will provide, and the like.

This brings up another interesting point you should probably consider. Given the variety of container configurations and the lack of an overall industry standard, you might find yourself locked into a specific container manufacturer for the long haul. If having multiple vendors is important, you will need to find vendors compatible with a standard that you define, or wait until there is an industry standard. Some look to the widely publicized Microsoft C-Blox specification as a potential basis for a standard. This is their internal container specification that many vendors have configurations for, but you need to keep in mind that it’s based on Microsoft’s requirements and might not meet yours. Until the Green Grid, ASHRAE, or another such standards body starts looking to drive standards in this space, it’s probably something to be concerned about. This What’s In/What’s Out conversation becomes important in other areas as well. In the sections below on Finance asset classes and Operational items, understanding what is inside has some large implications.

Great Server manufacturers are not necessarily great Data Center Engineers

Related to the previous topic, I would recommend that your facilities people really take a look at the mechanical and electrical distribution configurations of the container manufacturers you are evaluating. The lack of standards leaves a lot of room for interpretation, and you may find that the one-line diagrams or configuration of the container itself will not meet your specifications. Just because a firm builds great servers, it does not mean they build great containers. Keep in mind, a data center container is a blending of both IT and infrastructure that would normally be housed in a traditional data center. In many cases the actual data center componentry and design might be new to the vendor. Some vendors are quite good, some are not. It’s worth doing your homework here.

Certification – Yes, it’s different than Standards

Another thing you want to look for is whether or not your provider is UL and/or CE certified. It’s not enough that the servers and internal hardware are UL or CE listed; I would strongly recommend that the container itself has this certification. This is very important, as you are essentially talking about a giant metal box that is connected to somewhere between 100 kW and 500 kW of power. Believe me, it is in your best interest to ensure that your solution has been tested and certified. Why? Well, a big reason can be found down the yellow brick road.

The Wizard of AHJ or Pay attention to the man behind the curtain…

For those of you who do not know who or what an AHJ is, let me explain. It stands for Authority Having Jurisdiction. It may sound really technical, but it really breaks down to being the local code inspector where you wish to deploy your containers. This could be one of the biggest things to pay attention to, as your local code inspector could quickly sink your efforts or considerably increase the cost to deploy your container solution from both an operational and a capital perspective.

Containers are a relatively new technology, and more than likely your AHJ will not have any familiarity with how to interpret this technology in the local market. Given the fact that there is not a large sample set for them to reference, their interpretation will be very, very important. It’s important to ensure you work with your AHJ early on. This is where the UL or CE listing can become important. An AHJ could potentially interpret your container in one of two ways. The first is that of a big giant refrigerator. It’s a bad example, but what I mean is a piece of equipment. UL and CE listing on the container itself will help with that interpretation. This should be the correct interpretation ultimately, but the AHJ can do what they wish. The second is that they might look at the container as a confined work space. They might ask you all sorts of interesting questions, like how often people will be going into it to service the equipment. If there is no UL/CE listing, they might look at the electrical and mechanical installations and distribution and rule that it does not meet local electrical codes for distances between devices, etc. Essentially, the AHJ is an all-powerful force who could really screw things up for a successful container deployment. It’s important to note that while UL/CE gives you a great edge, your AHJ could still rule against you. If he rules the container a confined work space, for example, you might be required to suit your IT workers up in hazmat/thermal suits in two-man teams to change out servers or drives. Funny? That’s a real example and interpretation from an AHJ. Which brings us to how important the IT configuration is for your use of containers.

Is IT really ready for this?

As you read this section please keep our Wizard of AHJ in the back of your mind. His influence will still be felt in your IT world, whether your IT folks realize it or not. Containers are really best suited if you have a high degree of automation in your IT function for those services and applications to be run inside them. If you have an extremely ‘high touch’ environment where you do not have the ability to remotely access servers and need physical human beings to do a lot of care and feeding of your server environment, containers are not for you. Just picture, IT folks dressed up like spacemen. It definitely requires that you have a great deal of automation and think through some key items.

Let’s first look at your ability to remotely image brand new machines within the container. Perhaps you have this capability through virtualization or perhaps through software provided by your server manufacturer. One thing is a fact: this is an almost must-have technology with containers. Given the fact that a container can come with hundreds to thousands of servers, you really don’t want Edna from IT in a container with DVDs and manually loaded software images. Or worse, the AHJ might be unfavorable to you, and you might have to have two people in suits with the DVDs for safety purposes.

So definitely keep in mind that you really need a way to deploy your images from a central image repository in place. Which then leads to the integration with your potential configuration management systems (asset management systems) and network environments.

Configuration Management and Asset Management systems are also essential to a successful deployment so that the right images get to the right boxes. Unless you have a monolithic application, this is going to be a key problem to solve. Many solutions in the market today are based upon the server or device ‘ARP’ing out its MAC address, with some software layer intercepting that ARP and correlating the MAC address against a database to find the matching entry in your image repository or configuration management system. Otherwise you may be back to Edna and her DVDs and her AHJ-mandated buddy.
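
To make that correlation step a bit more concrete, here is a minimal sketch in Python of looking up a server image by MAC address. Everything in it is hypothetical and for illustration only: the `AssetRecord` type, the in-memory `ASSET_DB`, and the image names are assumptions, not any vendor’s actual product or API. A real deployment would query your own asset or configuration management system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AssetRecord:
    """One row of a hypothetical asset database."""
    mac: str
    role: str
    image: str


def normalize_mac(mac: str) -> str:
    """Lower-case a MAC address and strip the common separators."""
    cleaned = mac.lower().replace("-", "").replace(":", "").replace(".", "")
    if len(cleaned) != 12 or any(c not in "0123456789abcdef" for c in cleaned):
        raise ValueError(f"not a MAC address: {mac!r}")
    return cleaned


# Hypothetical records; in practice these come from asset management.
_RECORDS = [
    AssetRecord("00:1A:2B:3C:4D:5E", "web", "web-frontend-v12"),
    AssetRecord("00-1A-2B-3C-4D-5F", "batch", "batch-worker-v7"),
]
ASSET_DB = {normalize_mac(r.mac): r for r in _RECORDS}


def image_for(mac: str) -> Optional[str]:
    """Return the image name registered for this MAC, or None if unknown."""
    record = ASSET_DB.get(normalize_mac(mac))
    return record.image if record else None
```

The point of the sketch is the failure case: a machine whose MAC is not in the database gets `None`, which is exactly the box that ends up needing Edna. Loading the database before the container arrives is what keeps people out of the box.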

Of course the concept of ARP’ing brings up your network configuration. Make sure you put plenty of thought into network connectivity for your container. Will you have one VLAN or multiple VLANs across all your servers? Can the network equipment you selected handle the number of machines inside the container? How your container is configured from a network perspective, and your ability to segment out the servers in a container, could be crucial to your success. Everyone always blames the network guys for issues in IT, so it’s worth having the conversation up front with the Network teams on how they are going to address the connectivity A) to the container and B) inside the container from a distribution perspective.
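
As a rough illustration of the sizing question, the sketch below uses Python’s standard `ipaddress` module to check whether a single IPv4 subnet has room for a container’s worth of servers. The figures for addresses per server and reserved addresses are assumptions I made up for the example (say, one address for the host and one for a management controller); substitute whatever your network team actually plans.

```python
import ipaddress


def usable_hosts(cidr: str) -> int:
    """Usable host addresses in an IPv4 subnet (excludes network and broadcast)."""
    net = ipaddress.ip_network(cidr)
    return max(net.num_addresses - 2, 0)


def fits(cidr: str, server_count: int,
         addresses_per_server: int = 2, reserved: int = 10) -> bool:
    """True if the subnet can hold every server plus reserved addresses.

    addresses_per_server and reserved are illustrative assumptions.
    """
    needed = server_count * addresses_per_server + reserved
    return usable_hosts(cidr) >= needed


if __name__ == "__main__":
    for cidr in ("10.0.0.0/24", "10.0.0.0/21", "10.0.0.0/20"):
        print(cidr, usable_hosts(cidr), fits(cidr, server_count=1000))
```

A check like this only answers the address-count question. Whether you actually want a thousand machines in one broadcast domain is the conversation to have with the Network team.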

As long as I have all this IT stuff, Containers are cheaper than traditional DC’s right?

Maybe. This blends a little with the next section on finance things to think about for containers, but it’s really sourced from a technical perspective. Today you purchase containers in terms of total power draw for the container itself: 150 kW, 300 kW, 500 kW, and like denominations. This ultimately means that you want to optimize your server environments for the load you are using. Not utilizing the entire power allocation could quickly flip the economic benefits of going to containers. I know what you’re thinking: Mike, this is the same problem you have in a traditional data center, so this should really be a push and a non-issue.

The difference here is that you have a higher upfront cost with the containers. Let’s say you are deploying 300 kW containers as a standard. If you never really drive those containers to 300 kW and, let’s say, your average is 100 kW, you are only getting 33% of the cost benefit. If you then add a second container and drive it to a like capacity, you may find yourself paying a significant premium for that capacity, at a much higher price point than deploying those servers to traditional raised floor space, for example. Since we are brushing up on economic and financial aspects, let’s take a quick look at things to keep an eye on in that space.
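
The utilization arithmetic above can be sketched in a few lines of Python. The container price used here is a purely hypothetical number chosen to make the math easy to follow, not a quote from any vendor; only the 300 kW rating and 100 kW average load come from the example in the text.

```python
def utilization(rated_kw: float, used_kw: float) -> float:
    """Fraction of the container's rated power that is actually used."""
    if rated_kw <= 0:
        raise ValueError("rated_kw must be positive")
    return used_kw / rated_kw


def cost_per_used_kw(container_cost: float, used_kw: float) -> float:
    """Upfront container cost spread over the power you actually draw."""
    if used_kw <= 0:
        raise ValueError("used_kw must be positive")
    return container_cost / used_kw


if __name__ == "__main__":
    price = 1_500_000  # hypothetical purchase price for a 300 kW container
    print(f"utilization at 100 kW: {utilization(300, 100):.0%}")
    print(f"cost per kW at full load: {cost_per_used_kw(price, 300):,.0f}")
    print(f"cost per kW at 100 kW:    {cost_per_used_kw(price, 100):,.0f}")
```

Under that assumed price, running at a third of the rated load triples the effective cost per kW. That is the number to compare against your traditional raised floor cost before buying the second container.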

Finance Friendly?

Most people have the idea that containers are ultimately cheaper and therefore those Finance guys are going to love them. They may actually be cheaper or they may not, regardless there are other things your Finance teams will definitely want to take a look at.

The first challenge for your finance teams is to figure out how to classify this new asset called a container. If you think about traditional asset classification for IT and data center investments, they typically fall into 3 categories from which the rules for depreciation are set. The first is software. The second is server-related infrastructure such as servers, hardware, racks, and the like. The last category is the data center components themselves. Software investments might be capitalized over anywhere between 1 and 10 years. Servers and the like typically range from 3 to 5 years, and data center components (UPS systems, etc.) are depreciated over closer to 15 to 30 years. Containers represent an asset that is really a mixed asset class. The container obviously houses servers that have a useful life (presumably shorter than that of the container housing itself), and it also contains components that might be found in the data center, which traditionally have a longer depreciation cycle. Remember our What’s In? What’s Out? conversation? So your finance teams are going to have to figure out how they deal with a mixed asset class technology. There is no easy answer to this. Some Finance systems are set up for this, others are not. An organization could move to treat it in an all-or-nothing fashion. For example, if the entire container is depreciated over a server life cycle, it will dramatically increase the depreciation hit for the business. If you opt to depreciate it over the life of the longer-lived items, then you will need to figure out how to account for the fact that the servers within will be rotated much more frequently. I don’t have an easy answer to this, but I can tell you one thing. If your Finance folks are not looking at containers along with your facilities and IT folks, they should be. They might have some work to do to accommodate this technology.
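
To show why the classification matters, here is a small Python sketch comparing the annual depreciation hit under the different treatments. It assumes simple straight-line depreciation with no salvage value, which is a simplification (your tax rules may well differ), and the dollar figures and the 4 and 20 year lives are hypothetical values picked from within the ranges mentioned above.

```python
from typing import Iterable, Tuple


def annual_depreciation(components: Iterable[Tuple[float, float]]) -> float:
    """Sum of straight-line annual depreciation over (cost, life_in_years) pairs."""
    return sum(cost / years for cost, years in components)


if __name__ == "__main__":
    servers = 2_000_000          # hypothetical cost of the servers inside
    infrastructure = 1_000_000   # hypothetical cost of the box, UPS, cooling
    total = servers + infrastructure

    all_as_server = annual_depreciation([(total, 4)])
    all_as_facility = annual_depreciation([(total, 20)])
    by_component = annual_depreciation([(servers, 4), (infrastructure, 20)])

    print(f"whole container on a 4 year life:  {all_as_server:,.0f} per year")
    print(f"whole container on a 20 year life: {all_as_facility:,.0f} per year")
    print(f"each component on its own life:    {by_component:,.0f} per year")
```

With these made-up numbers the all-or-nothing choices land at 750,000 versus 150,000 per year, with the component-by-component treatment at 550,000 in the early years. The spread between those figures is the conversation your Finance team needs to have.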

Related to this, you might also want to think about containers from an insurance perspective. How is your insurer looking at containers, and how do they allocate cost versus risk for this technology set? You’re likely going to have some detailed conversations to bring them up to speed on the technology by and large. You might find they require you to put in additional fire suppression. (It’s a metal box; if something catches on fire inside, it should naturally be contained, right? What about the burning plastics?) How is water delivered to the container for cooling? Where and how does electrical distribution take place? These are all questions that could adversely affect the cost or operation of your container deployment, so make sure you loop your insurer in as well.

Operations and Containers

Another key area to keep in mind is how your operational environments are going to change as a result of the introduction of containers. Let’s jump back a second and go back to our insurance examples. A container could weigh as much as 60,000 pounds (US). That is pretty heavy. Now imagine you accidentally smack into a load-bearing wall or column as you try to push it into place. That is one area where Operations and Insurance are going to have to work together. Is your company licensed and bonded for moving containers around? Does your area have union regulations under which only union personnel are certified and bonded to do that kind of work? These are important questions and things you will need to figure out from an Operations perspective.

Going back to our What’s In and What’s Out conversation – you will need to ensure that you have the proper maintenance regimen in place to facilitate the success of this technology. Perhaps the stuff inside is part of the contract you have with your container manufacturer. Perhaps it’s not. What work will need to take place to properly support that environment? If you have batteries in your container, how do you service them? What’s the Wizard of AHJ’s ruling on that?

The point here is that an evaluation for containers must be multi-faceted. If you only look at this solution from a technology perspective you are creating a very large blind spot for yourself that will likely have significant impact on the success of containers in your environment.

This document is really meant to be the first of an evolutionary watch of the industry as it stands today. I will add observations as I think of them and repost accordingly over time. Likely (and hopefully) many of the challenges and things to think about may get solved over time and I remain a strong proponent of this technology application. The key is that you cannot look at containers purely from a technology perspective. There are a multitude of other factors that will make or break the use of this technology. I hope this post helped answer some questions or at least force you to think a bit more holistically around the use of this interesting and exciting technology.

\Mm

Mike, you outdid yourself. Great in-depth article on Containers. Extremely informative for me. Thanks

Clarification: Containers are listed by the IRS as asset class 00.27, with a 5 year depreciation under MACRS. I am sure that many will have their own views on how their organization should handle depreciation, but the IRS has its opinion on the metal.

Greg,

You are exactly right as far as the metal enclosure component of a data center container. The trick will be whether or not the change of use is significant enough for the IRS to either create a sub-class or another class entirely. Another option could be to keep the container at 5 years and then individually depreciate the components inside. I guess my primary point here is that your Finance teams should definitely start looking at this, as it’s a bit trickier. As the container industry matures this should eventually be solved. Until then, make sure you have a bean-counter involved. (No offense of course 🙂 )

\Mm