Let me start with the fact that data center measurement is paramount if this industry is to manage itself effectively. When I give a talk, I usually begin by asking the crowd three basic questions:

1) How many in attendance are monitoring and tracking electrical usage?

2) How many in attendance measure datacenter efficiency?

3) How many work for organizations in which the CIO looks at the power bills?

The response to these questions has been abysmally low for years, but I have been delighted that, slowly but surely, the numbers are rising. Not by much, mind you, but they are inching up. We are approaching a critical time in the development of the data center industry and in where it (and the technologies involved) will go.

To that end, there is no doubt that the PUE (Power Usage Effectiveness, total facility power divided by IT equipment power) metric has been instrumental in driving awareness and visibility in the space. The Green Grid did a great job of pulling this metric together and evangelizing it to the industry. Despite a host of other potential metrics out there, PUE has captured the industry because of its relatively straightforward approach. But in my opinion, PUE is poised to become a victim of its own success unless the industry takes steps to standardize how it is used in marketing material and how it is talked about.

Don’t get me wrong, I am rabidly committed to PUE as a metric and as a guiding tool in our industry. In fact, I have publicly defended this metric against its detractors for years. So this post is a small plea for sanity.

These days, I view each and every public statement of PUE with a heaping shovelful of skepticism, regardless of company or perceived leadership position. In my mind, measuring your company’s environment and energy efficiency is a pretty personal experience. I don’t care which metric you use (even if it’s not PUE) as long as you take a baseline measurement and measure consistently over time, making changes to achieve greater and greater efficiency. There is no magic pill, no technology, no approach that gives you efficiency nirvana. What gets you there is a process that combines technology (both high tech and low tech), procedure, and old-fashioned, roll-up-your-sleeves operational best practices, applied over time.

With mounting regulatory efforts, internal scrutiny of capital spending, a general lack of market inventory, and a host of other factors, the push for efficiency has never been greater, and the spotlight on efficiency as a feature of the “data center product” is in full swing. Increasingly, PUE is moving from data center professionals and facilities groups to the marketing department. I view this as a bad thing.

Enter the Marketing Department

In my new role, I get visibility into all sorts of interesting things I never got to see while managing the infrastructure for a globally ubiquitous cloud rollout. One of the more interesting items was an RFP issued by a regional government for a data center. The RFP had all the normal things you would expect, but with one caveat: the facility must have a PUE of 1.2. When asked about this target, the person in charge stated that if Google and Microsoft were achieving this level, they wanted the same thing, because it was becoming the industry standard. Of course, differences in application makeup, legacy systems, the fact that the facility would also have to house massive tape libraries (read: low power density), and a host of other factors made it practically impossible for them to achieve this. It was then that I started to get an inkling that PUE was drifting away from its original intent.

You don’t have to look far to read about the latest company that has broken the new PUE barrier of 1.5, or 1.4, or 1.3, or 1.2, or even 1.1. It’s like the space race. Except that the claims of achieving those milestones are never really backed up with real data that could prove or disprove them. It’s all a bunch of nonsensical bunk. And it’s in this nonsensical bunk that we will damn ourselves in the eyes of those who have absolutely no clue how this stuff actually works. Marketing wants quick bullet points and a vehicle to show some kind of technological superiority or green badge of honor. When someone walks up to me at a trade show or emails me braggadocious claims of PUE, they are unwittingly picking a fight with me, and I am always up for the task.

WHICH PUE DO YOU USE?

Let’s have an honest, open, and frank conversation about this topic, shall we? When someone tells me about the latest, greatest PUE they have achieved or heard about, my first question is, ‘Oh yeah? Which PUE are they/you using?’ I love the response I typically get when I ask. Eyebrows twist up and a perplexed look takes over their face. Which PUE?

If you think about it, it’s a valid question. Are they looking at Average Annual PUE? Are they looking at Average Peak PUE? Are they looking at Design Point PUE? Are they looking at Annual Average Calculated PUE? Are they looking at Commissioning State PUE? At what interval are they measuring? Is this the PUE they achieved one time at 1:30 AM on the coldest night in January?

I sound like an engineer here, but there is a vast territory of values between these numbers, and any or all of them may have nothing to do with reality. If you will allow me a bit of role-playing, let’s walk through a scenario in which we (you and I, dear reader) are about to build and commission our first facility.

We are building out a 1 MW facility with a targeted PUE of 1.5. After a successful build-out with no problems or hiccups (we are role-playing, remember), we begin commissioning with load banks to simulate load. During commissioning, we measure a PUE of 1.40. Congratulations, we have beaten our design goal, right? We have crossed the 1.5 barrier! Well, maybe not. Let’s ask the question: how long did we run the Level 5 commissioning? Some vendors burn it in over the course of 12 hours, some over a full day. Does that 1.40 represent the average of the values collected? The averaged peak? The lowest value? What month are we in? Will it be significantly different in July? January? May? Where is the facility located? The values we see over time in operation will differ significantly from those at commissioning.

A few years back, when I was at Microsoft, we publicly released the data below for a mature facility at capacity that had been operating and collecting information for four years. We had been tracking PUE, or at least the variables used in PUE, for that long. You can see the variations in PUE in the chart. Keep in mind that this chart reflects a very concentrated effort to drive efficiency over time. Even in a mature facility where the load remains mostly constant, PUE varies and fluctuates. Add to that the deltas between average, peak, and average peak. Which numbers are you using?

(source: GreenM3 blog)

OK, let’s say we settle on using just the average (it’s always the lowest PUE number, with the exception of a one-time measurement). We want to look good to management, right? If you are a colo company or data center wholesaler, you may even give marketing a look-see to find out whether there is any value in it. We are very proud of ourselves. There is much backslapping and glad-handing as we send our production model out the door.

Just like an automobile, our data center depreciates as soon as the wheels hit the street. Except that with data centers, it’s the PUE that takes the hit.

THE IMPORTANCE OF USE

Our brand-new facility is now empty. The load banks have been removed, and we have pristine white floor space ready to go. With little to no IT load, our facility currently has a PUE somewhere between 7 and 500. It’s just math (refer back to how PUE is actually calculated): a largely fixed overhead is being divided by an IT load close to zero. So now our PUE will be a function of how quickly we consume the capacity. But wait, how can our PUE be so high? We have proof from commissioning that we created an extremely efficient facility. It’s all in the math, and it’s math that marketing people don’t like. It screws with the message. Small revelation here: data centers become more “efficient” the more energy they consume! Regulations that take PUE into account will need to worry about this troublesome side effect.
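A few lines of Python can sketch the arithmetic behind “somewhere between 7 and 500”. This is a minimal illustration that assumes a hypothetical 300 kW of overhead (cooling, UPS losses, lighting) staying roughly constant while the floor is nearly empty. The numbers are illustrative and do not come from any real facility.

```python
def pue(it_kw: float, overhead_kw: float) -> float:
    """PUE = total facility power / IT power, where total = IT + overhead."""
    if it_kw <= 0:
        raise ValueError("PUE is undefined when there is no IT load")
    return (it_kw + overhead_kw) / it_kw


# Hypothetical overhead (assumption): held fixed, which is only a fair
# simplification while the floor is nearly empty.
OVERHEAD_KW = 300.0

for it_kw in (0.6, 5.0, 50.0, 200.0):
    print(f"IT load {it_kw:6.1f} kW -> PUE {pue(it_kw, OVERHEAD_KW):6.1f}")
```

As the IT load rises and the overhead stays put, PUE falls. The facility looks more “efficient” simply because it is consuming more energy.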

There are lots of interesting things you can do to minimize this extremely high PUE at launch, like shutting down CRAH units or removing perf tiles and replacing them with solid tiles, but ultimately your PUE is going to be much, much higher regardless.

Now let’s look at how IT deployments actually ramp in new data centers. In many cases, enterprises build data centers to last them a long time. This means there is little likelihood that your facility will look anything like your commissioning numbers (with load banks installed). Add to that the fact that traditional data center construction has you building out all of the capacity from the start. This essentially means your PUE will not have a great story for quite some time. It’s also why I am high on the modularized approach. Smaller, more modular units allow you to grow your facility out more efficiently, in cost as well as in energy.
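Here is a rough way to see why building in smaller modules helps early PUE. This sketch rests on an assumed overhead model with hypothetical coefficients: part of the overhead scales with the capacity that has been *built*, and part scales with the IT load actually deployed. The 1 MW facility and 250 kW module size are illustrative only.

```python
import math

# Hypothetical overhead model (assumption, not measured data).
FIXED_KW_PER_KW_BUILT = 0.3   # e.g. 300 kW of fixed overhead for a 1 MW build
VARIABLE_KW_PER_KW_IT = 0.2   # overhead that tracks the deployed IT load


def pue(it_kw: float, built_kw: float) -> float:
    overhead = FIXED_KW_PER_KW_BUILT * built_kw + VARIABLE_KW_PER_KW_IT * it_kw
    return (it_kw + overhead) / it_kw


def monolithic_pue(it_kw: float, facility_kw: float = 1000.0) -> float:
    # Traditional approach: all capacity is built on day one.
    return pue(it_kw, facility_kw)


def modular_pue(it_kw: float, module_kw: float = 250.0) -> float:
    # Modular approach: only as many modules as the current load needs.
    modules = max(1, math.ceil(it_kw / module_kw))
    return pue(it_kw, modules * module_kw)


for it_kw in (100.0, 200.0, 500.0, 1000.0):
    print(f"{it_kw:6.0f} kW IT: monolithic {monolithic_pue(it_kw):.2f}, "
          f"modular {modular_pue(it_kw):.2f}")
```

Under these assumptions, both approaches converge on the same 1.5 once the facility is full. The difference shows up only during the ramp, which is exactly where the monolithic build looks worst.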

So if we go back to our marketing friends, our PUE looks nothing like the announcement anymore. Future external audits might highlight this, and we may fall under scrutiny for falsely advertising our numbers. So let’s pretend we are trying to do everything correctly and have projected that we will completely fill our facility in 5 years.

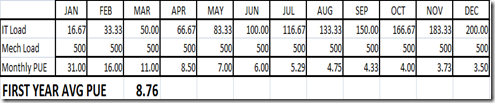

In the first year, we successfully fill 200 kW of load in our facility. We are right on track. Except that the 200 kW was likely not deployed all at once; it was deployed over the course of the year. That means my end-of-year PUE may be something like 3.5, but it was much higher earlier in the year. If I take my annual average, it certainly won’t be 3.5. It will be much higher. In fact, if I distribute the 200 kW equally over the 12 months of that first year, my PUE looks like this:

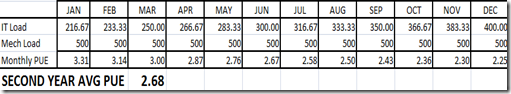

That looks nothing like the PUE we advertised, does it? And I am not even counting the variability introduced by time of day or season. These are just end-of-month numbers, so the measurement frequency will affect this number as well. In the second year of operation, our numbers are still quite poor compared to our initial numbers.

Again, if I take the annual average PUE for the second year of operation, I am at neither my design target nor my commissioned PUE rating. So how can firms unequivocally state such wondrous PUEs? They can’t. Even this extremely simplistic example doesn’t take into account that load in the data center moves around based on utilization, and that it almost never reaches the power draw you expect. There are lots of variables here.
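The ramp above can be reproduced roughly in code. This sketch assumes a hypothetical overhead model: 300 kW of overhead present from day one plus 0.2 kW per kW of IT. That model lands exactly on the 1.5 design target at full load, and IT load is added evenly each month at 200 kW per year. Because the exact values depend entirely on the assumed overhead, they will not match the charts; the point is the shape.

```python
# Hypothetical 1 MW build-out (assumption, not measured data).
FIXED_OVERHEAD_KW = 300.0   # present from day one, regardless of IT load
VARIABLE_OVERHEAD = 0.2     # kW of overhead per kW of IT load
KW_PER_YEAR = 200.0         # fill 1 MW over 5 years, added evenly each month


def pue(it_kw: float) -> float:
    total_kw = it_kw + FIXED_OVERHEAD_KW + VARIABLE_OVERHEAD * it_kw
    return total_kw / it_kw


def monthly_pues(year: int) -> list[float]:
    """End-of-month PUE for each month of the given operating year (1-based)."""
    start_kw = (year - 1) * KW_PER_YEAR
    return [pue(start_kw + KW_PER_YEAR * month / 12) for month in range(1, 13)]


for year in (1, 2):
    pues = monthly_pues(year)
    annual_avg = sum(pues) / len(pues)
    print(f"Year {year}: first month {pues[0]:.2f}, "
          f"year-end {pues[-1]:.2f}, annual average {annual_avg:.2f}")
```

In this model, the first-year annual average (about 5.85) is more than double the year-end snapshot (2.7). The second year averages about 2.21, still well short of the 1.5 design target.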

Let’s be clear: this is how PUE is supposed to work! There is nothing wrong with these calcs. There is nothing wrong with the high values. It is what it is. The goal is to drive efficiency in your usage. Fixating on extremely low numbers that result from highly optimized integration between software and infrastructure, and making them the desirable targets, will do nothing but put obstacles in our way later on. Outsiders looking in want simplicity. They want quick-and-dirty numbers by which to manage the industry. As engineers, we know this is a bit more complex. Marketing efforts that focus on low PUEs will only damn us later on.

Additionally, if you allow me to put on my manager/businessman hat, there is a law of diminishing returns in focusing on lower and lower PUE. The cost of continued integration and optimization starts to erode its overall business value, and gains in efficiency are offset by the costs of achieving them. I speak as someone who drove numbers down into that range. The larger industry would be better served by focusing on application architecture, machine utilization, virtualization, and similar technologies before pushing closer to 1.0.

So what to do?

I fundamentally believe this would be an easy thing to correct, but it depends completely on how strong a role the Green Grid wants to play. I feel that the Green Grid has the authority and responsibility to establish guidelines for the formal usage of PUE ratings. I would posit the following ratings, with apologies in advance, as I am not a marketing guy who could come up with cleverer names:

Design Target PUE (DTP) – This is the PUE rating the design should theoretically be able to achieve. I see too many designs that have never been physically built. This would be the least “trustworthy” rating until the facility or approach has been built.

Commissioned Witnessed PUE (CWP) – This is the actual PUE witnessed at the time the facility is commissioned. There is a certainty about this rating, as it has actually been achieved and witnessed. This would be the rating most colo providers and wholesalers would need to use, as they have little influence over or visibility into customer usage.

Annual Average PUE (AAP) – This is what it says it is. However, I think the Green Grid needs to set a minimum data collection frequency (my recommendation is at least three times a day) to establish this rating. You also couldn’t publish this number without a full year’s worth of data.

Annual Average Peak PUE (APP) – My preference would be to use this, as it’s a value that actually matters to the ongoing operation of the facility. Given the operational challenge of managing power within a facility, you need to account for peaks more carefully, especially as you approach the end capacity of the space you are deploying. Again, hard frequencies need to be established, along with a full year’s worth of data.
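The AAP and APP rules above can be expressed in code. This is a minimal sketch of how a rating could refuse to publish until the data meets the minimums proposed here (a full year, at least three readings a day). The post does not pin down how the “peak” in APP is taken, so the code assumes it is each day’s highest reading. The readings in the usage lines are hypothetical.

```python
from statistics import mean

MIN_DAYS = 365            # "a full year's worth of data"
MIN_READINGS_PER_DAY = 3  # the minimum frequency suggested above


def check_coverage(daily_readings: list[list[float]]) -> None:
    """Raise if the data doesn't meet the proposed minimums."""
    if len(daily_readings) < MIN_DAYS:
        raise ValueError(f"need {MIN_DAYS} days of data, got {len(daily_readings)}")
    for day, readings in enumerate(daily_readings, start=1):
        if len(readings) < MIN_READINGS_PER_DAY:
            raise ValueError(f"day {day} has only {len(readings)} readings")


def annual_average_pue(daily_readings: list[list[float]]) -> float:
    """AAP: mean of every PUE reading taken over the year."""
    check_coverage(daily_readings)
    return mean(r for day in daily_readings for r in day)


def annual_average_peak_pue(daily_readings: list[list[float]]) -> float:
    """APP, assumed here to be the mean of each day's highest reading."""
    check_coverage(daily_readings)
    return mean(max(day) for day in daily_readings)


# Hypothetical year of readings (not real data): three readings a day.
year = [[1.45, 1.60, 1.50]] * 365
print(f"AAP {annual_average_pue(year):.3f}, APP {annual_average_peak_pue(year):.3f}")
```

Even with identical days, APP (1.600) sits above AAP (1.517). Real seasonal and daily swings would widen that gap.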

I think this would greatly cut back on ridiculous claims, or at least get us closer to a “truth in advertising” position. It would also allow outside agencies to come in and audit those claims over time. You could easily see extensions to ISO 14000, ISO 27000, and other audit certifications to test for it. Additionally, it gives outsiders a peek at the complexity of the space and allows for smarter mechanisms that drive greater efficiency (how about a 10% APP reduction target per year instead?).

As the Green Grid is a consortium of different companies (some of whom likely want to keep PUE fuzzy for their own gain), it will be interesting to see whether it steps up to better control the monster we have unleashed.

Let’s reclaim PUE and metrics from the marketing people.

\Mm

Worth mentioning here is tPUE, which James Hamilton is pushing as a better metric than PUE:

http://perspectives.mvdirona.com/2009/06/15/PUEAndTotalPowerUsageEfficiencyTPUE.aspx

Ian –

I am planning another post (a Chiller Side Chat, actually) on data center metrics. James and I go back quite a bit, sharing some Microsoft DNA and having lots of great internal debates. tPUE takes PUE to another level of detail. In general, people don’t get a lot of argument from me on measuring anything. Which method is best? That’s the point of my next post. However, tPUE looks to further define and break out the more “infrastructure” components within the historical IT load category. It does not help with the general tight spot we are getting ourselves into by not defining acceptable behavior and best practices for using PUE as a commercially comparable number. In fact, in my experience, marketing folks don’t even want to get to the general level of total load divided by IT load. They just want the number. Going to that next level (breaking fan power out of the IT load) will likely make their eyes glaze over.

But your point is valid, and it touches on another thing I have wanted to comment on: the emergence of multiple measurement methodologies. Let’s put that one on the ‘coming soon’ post list.

\Mm
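The tPUE idea can be made concrete with a short sketch. It assumes the commonly cited formulation tPUE = PUE × SPUE, where SPUE is server input power divided by the power that actually reaches the computing components (after power supply, voltage regulator, and fan losses). Check the linked post for the exact definition James uses. The figures are hypothetical.

```python
def pue(facility_kw: float, it_kw: float) -> float:
    """Facility power divided by power delivered to IT equipment."""
    return facility_kw / it_kw


def spue(server_input_kw: float, useful_kw: float) -> float:
    """Server-level overhead: input power over power reaching the computing
    components (i.e., after PSU, voltage-regulator and fan losses)."""
    return server_input_kw / useful_kw


def tpue(facility_kw: float, it_kw: float, useful_kw: float) -> float:
    # Assumes the entire IT load is server input power.
    return pue(facility_kw, it_kw) * spue(it_kw, useful_kw)


# Hypothetical numbers, not measurements.
facility_kw = 1500.0   # utility feed
it_kw = 1000.0         # power delivered to servers
useful_kw = 800.0      # after 200 kW of PSU, VRM and fan losses
print(pue(facility_kw, it_kw), tpue(facility_kw, it_kw, useful_kw))  # 1.5 1.875
```

The same facility that reports a PUE of 1.5 looks noticeably worse once the fan and conversion losses hiding inside the “IT load” are pulled out.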

Michael,

Awesome post (as always)! I really like the container idea and continue to push the concept, as well as look in the market for the right fit for someone to try it. Do you measure PUE for each container? Average the PUEs across a container farm? With a spine feeding containers, I would think you would have to account for the shared infrastructure, right?

-John

John, that’s exactly one of the interesting things about containers: it’s a nice packaged deal. Your servers are already there (your IT load), and depending on which solution you go with, there may be additional mechanical, UPS, and other loads in the container itself. Interestingly, I think the concept of tPUE will be more important to containers long term. You are dead on the mark in acknowledging that there will be a tax from the shared components that you will need to account for. But it’s interesting in that, as the market pushes for modularization, containers could ship with PUE ranges closer to the mark. No waiting on IT load to be deployed; it’s there in the deployment of capacity. Similarly, modularized approaches would limit your exposure to insane PUE ranges at launch, as you would deploy only the capacity you need.

\Mm
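John’s question about averaging PUEs across a container farm has a quick numeric answer. This sketch assumes the spine’s shared overhead is allocated to each container in proportion to its IT load; that allocation rule is an assumption, not an industry standard. The farm itself is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    it_kw: float        # server load inside the container
    overhead_kw: float  # in-container cooling, UPS losses, etc.


def farm_pue(containers: list[Container], shared_kw: float) -> float:
    """Whole-farm PUE: total power over total IT power (not an average)."""
    it = sum(c.it_kw for c in containers)
    overhead = sum(c.overhead_kw for c in containers) + shared_kw
    return (it + overhead) / it


def allocated_pues(containers: list[Container], shared_kw: float) -> dict[str, float]:
    """Per-container PUE after charging each container a share of the
    spine's overhead in proportion to its IT load (assumed rule)."""
    total_it = sum(c.it_kw for c in containers)
    result = {}
    for c in containers:
        tax = shared_kw * c.it_kw / total_it
        result[c.name] = (c.it_kw + c.overhead_kw + tax) / c.it_kw
    return result


# Hypothetical farm: two containers fed from a spine with 50 kW of overhead.
farm = [Container("A", it_kw=400.0, overhead_kw=60.0),
        Container("B", it_kw=100.0, overhead_kw=40.0)]
print(farm_pue(farm, shared_kw=50.0))        # 1.3
print(allocated_pues(farm, shared_kw=50.0))  # {'A': 1.25, 'B': 1.5}
```

Simply averaging the in-container PUEs (1.15 and 1.4) would give 1.275. That figure hides both the spine tax and the difference in container size. Summing energy first, or weighting the allocated PUEs by IT load, gives the farm’s true 1.3.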

Mike,

I always enjoy your candid and thoughtful insights on the issues facing the industry. We’ve grappled with ways to normalize PUE measurement so that we do not run into some of the issues stated above. We have started to center our measurements on kWh rather than kW readings. Choosing measurements at arbitrary times during the day, or at set times during the day/week/month, has never proved to be accurate. As you described, a building may have multiple tenants with different move-in (ramp-in) schedules, different load profiles, and different times of utilization (e.g., an online gaming company has a much different load graph than a law office, which in turn differs from a social network), so there is no good way to normalize PUE by taking a snapshot of the building load and IT load. By taking the kWh from the utility bills and the kWh recorded on meters above AND below the UPSs, as well as on the mechanical plant, you will be able not only to normalize the PUE numbers but also to compile information that ties back through all systems. This kind of information would allow data center operators to review efficiencies at a more granular level (are most losses coming from the UPSs, the chillers, the CRAH units, etc.?) and can give operators a road map of where to concentrate their efficiency projects.
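The kWh approach described above can be sketched with four meter totals. The sketch assumes that UPS output energy stands in for IT energy (ignoring PDU or transformer losses downstream of the UPS) and that anything not on the UPS or mechanical meters (lighting and the like) falls into “other”. The meter values are hypothetical.

```python
def energy_breakdown(utility_kwh: float, ups_in_kwh: float,
                     ups_out_kwh: float, mechanical_kwh: float) -> dict[str, float]:
    """Energy-based PUE plus an overhead split by subsystem.

    Assumes UPS output is the IT energy and that whatever the UPS and
    mechanical meters don't see (lighting, etc.) is "other".
    """
    return {
        "pue": utility_kwh / ups_out_kwh,
        "ups_loss_kwh": ups_in_kwh - ups_out_kwh,
        "mechanical_kwh": mechanical_kwh,
        "other_kwh": utility_kwh - ups_in_kwh - mechanical_kwh,
    }


# Hypothetical month of meter totals, not real data.
b = energy_breakdown(utility_kwh=1_100_000, ups_in_kwh=760_000,
                     ups_out_kwh=700_000, mechanical_kwh=300_000)
overhead = b["ups_loss_kwh"] + b["mechanical_kwh"] + b["other_kwh"]
for part in ("ups_loss_kwh", "mechanical_kwh", "other_kwh"):
    print(f"{part:15s} {b[part]:>9,.0f} kWh ({b[part] / overhead:.0%} of overhead)")
print(f"PUE {b['pue']:.2f}")
```

Because every term is an energy total over the same period, the result does not depend on when a snapshot happened to be taken. The breakdown also points straight at where the overhead lives: in this made-up month, 75% of it is in the mechanical plant.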

Mike, very good synopsis of the risks of oversimplifying PUE. If our industry needs to be about efficiency, i.e., doing more with less, then that’s what we should focus on. PUE has had a transformational effect on the industry’s understanding of what is going on, but implying that it is the one single truth, a panacea, would be foolhardy. I think of a client of mine who drove a very successful virtualisation and consolidation program: he reduced gross site power consumption by nearly 13%, but his PUE increased. Did he do a bad job? I think not. Lower PUE doesn’t always imply lower energy consumption, and isn’t lower energy consumption what we’re chasing?

-Ciaran
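Ciaran’s example of total power falling while PUE rises is easy to reproduce. The before and after figures in this sketch are hypothetical, chosen only to mirror the anecdote. The key assumption is that cooling and electrical overhead is largely fixed and so shrinks far less than the IT load does.

```python
def pue(it_kw: float, overhead_kw: float) -> float:
    return (it_kw + overhead_kw) / it_kw


# Hypothetical before/after figures (not the client's real data).
before = {"it_kw": 1000.0, "overhead_kw": 500.0}
# Consolidation removes 150 kW of IT load, but the mostly fixed
# overhead only falls by 45 kW.
after = {"it_kw": 850.0, "overhead_kw": 455.0}

for label, site in (("before", before), ("after", after)):
    total = site["it_kw"] + site["overhead_kw"]
    print(f"{label:6s} total {total:6.0f} kW, PUE {pue(**site):.3f}")

total_before = before["it_kw"] + before["overhead_kw"]
total_after = after["it_kw"] + after["overhead_kw"]
saving = 1 - total_after / total_before
print(f"site power reduced by {saving:.0%}")
```

The site draws 13% less power, yet its PUE moves from 1.500 to about 1.535. Judged by PUE alone, the better outcome looks like a regression.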

Mike,

Nice summary of many of the good and bad factors surrounding the (ab)use of PUE. I like your suggestions for methods of measuring, but I might suggest that each one include the letters PUE to maintain the momentum around the PUE concept (something like dtPUE for Design Target PUE).

It’s like the days before the EPA standardized the testing methodology for claimable MPG. I’ve noticed that if I drive my Honda Insight down a long mountain highway with my foot off the gas, I get 150 miles per gallon!!

If I ask vendors about PUE, I ask them for dtPUEs at several environmental conditions that we are likely to see here in Portland, OR:

A cool, wet winter day – 37 °F, 100% RH

A dry, hot day – 100 °F, 6% RH

A warm, humid day – 72 °F, 70% RH

This range of conditions can help me understand the range of PUEs we may see in actual operation.

Thanks again for posting relevant and interesting info.

-Dave

Dave,

Great points. I am on board with keeping the PUE naming convention; I was trying to illustrate a more categorical approach. My fear is that those unfamiliar with this topic may come away with the perception that this is a monolithic, well-defined metric (along the lines of MPG). The MPG analogy is a great one, as it had the same issues until a formal definition and set of criteria were established.

Mike

Mike,

I agree with you totally, although I do see a few challenges. There are still a lot of data centers around that measure the load by hand only once a month. Without online measurement that automatically takes readings three times a day at exactly the same minute, you cannot collect all this data. To compare apples with apples, you need a scenario that all data centers can live up to.

Regards,

Robert

Robert-

You are spot on with your statement that many facilities are still measuring load only by hand. I would argue, however, that there are other comparative points to consider. If you measure once a day by hand (what I call SneakerNet BMS) at or around the same time, even that daily measurement is enough to improve efficiency over time. You don’t need to be heavily instrumented to start effecting change. I have always contended that measurement is a personal journey (and therefore NEVER apples to apples). It’s all about driving efficiency, whether you are motivated by saving money, sating upper management’s desire to be green, or a plain desire to be less wasteful. In this case you can satisfy any or all of the three. To add to your point, there is still a healthy percentage of facilities out there that do not measure load at all. Or, if they do, they do nothing with the data.

Mike

Good post for many reasons, but I liked best that you highlighted the “troublesome side effect” that is all too often absent from these discussions: increased efficiency through increased consumption.

I quoted your comments: http://vertatique.com/?q=pue-increased-efficiency-through-increased-consumption

Some interesting points about the wants of marketing and realistic expectations. While I have been on the marketing side for over 20 years, I have never seen a time with more demanding expectations. I appreciated your thoughts.

I like your suggestions for methods of measuring.

Choosing measurements at arbitrary times during the day, or at set times during the week or month, can be accurate.

However, I think outside agencies should come in and audit those claims over time.

One unexpected side effect of the spread of PUE, for a colocation operator, was that our customers soon realized their bills could be used to “guess” the operator’s PUE.

Many colo operators charge their users not only for metered UPS power but also for cooling energy consumption, either as metered chilled water MJ (in the case of dedicated CPU rooms) or as a percentage of UPS power (often in a shared environment).

The latter leads immediately to PUE, as all the customer needs to do is express the percentage as a fraction and add 1 (if you are charged 80% of UPS power for cooling power, then the operator is working at a PUE of 1.80, and so on). In the case of the former, some energy calculations are necessary, but it amounts to (UPS energy + cooling energy) / (UPS energy).

So, if your colo operator claims a PUE of 1.4 for its data center and yet charges you a cooling energy fee amounting to 50% of your UPS power bill, something funny is going on with your colo operator…
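The bill check described in this comment can be sketched for the percentage-of-UPS-power case. The sketch makes two assumptions. First, the cooling charge is a pass-through of the operator’s actual cooling energy. Second, cooling is the only overhead. Other overheads (UPS losses, lighting) would push the real PUE higher, so a claimed PUE well below the implied figure is the suspicious case. A markup on cooling would cut the other way. The function names and the 0.05 tolerance are hypothetical.

```python
def implied_pue(cooling_charge_pct: float) -> float:
    """PUE implied when cooling is billed as a percentage of UPS power,
    treating the charge as pass-through and cooling as the only overhead."""
    return 1 + cooling_charge_pct / 100


def claim_looks_suspicious(claimed_pue: float, cooling_charge_pct: float,
                           tolerance: float = 0.05) -> bool:
    """Flag claims noticeably below what the bill implies (hypothetical rule)."""
    return claimed_pue < implied_pue(cooling_charge_pct) - tolerance


print(implied_pue(80))                   # 1.8
print(claim_looks_suspicious(1.4, 50))   # True: the bill implies about 1.5
```

A claimed 1.5 against a 50% cooling charge would pass this check. A claimed 1.4 would not.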