Data centers are a hot topic these days. No matter where you look, this once obscure aspect of infrastructure is getting a lot of attention. For years IT operations have been under cost pressure, and that pressure, arriving just as the need for modern capacity is greater than ever, has thrust data centers into the spotlight. Server and rack density continues to rise, putting DC professionals and businesses in tighter and tougher situations as they struggle to manage their IT environments. And now hyper-scale cloud infrastructure is pushing traditional technologies to limits never explored before and focusing the imagination of the IT industry on new possibilities.

At Microsoft, we have put a lot of thought and research into how best to operate and maintain our global infrastructure, and we want to share what we have learned. While there are obviously some aspects we keep to ourselves, we have shared how we operate our facilities day to day, our technologies and methodologies, and, most importantly, how we monitor and manage our facilities. Whether it’s speaking at industry events, inviting customers to our “Microsoft data center conferences” held in our data centers, or publishing through other media like blogs and white papers, we believe sharing best practices is paramount and will drive the industry forward. So in that vein, we have some interesting news to share.

Today we are sharing our Generation 4 Modular Data Center plan. This is our vision, and it will be the foundation of our cloud data center infrastructure over the next five years. We believe it is one of the most revolutionary changes to happen to data centers in the last 30 years. Joining me in writing this post are Daniel Costello, my director of Data Center Research and Engineering, and Christian Belady, principal power and cooling architect. I feel their voices will add significant value in explaining the many benefits of this new design paradigm.

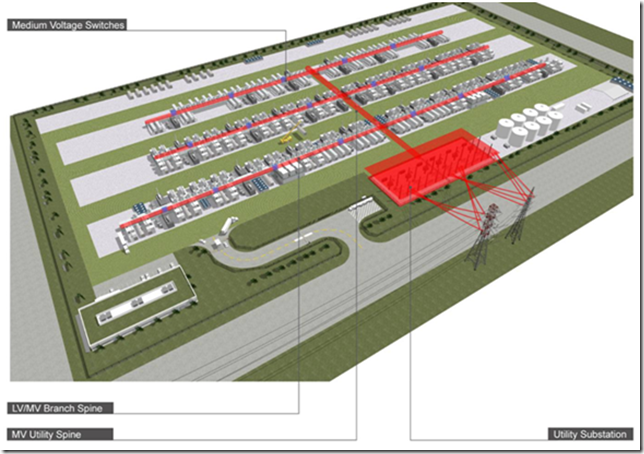

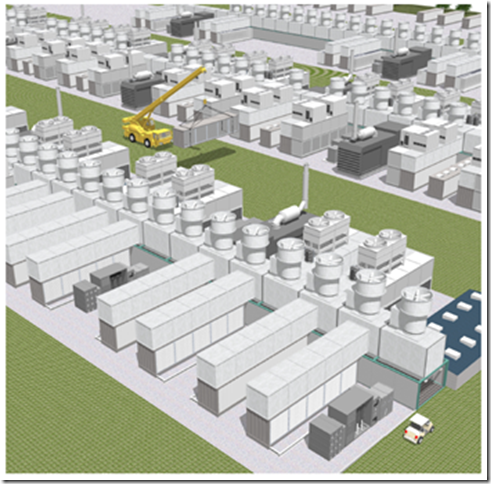

Our “Gen 4” modular data centers will take the flexibility of containerized servers—like those in our Chicago data center—and apply it across the entire facility. So what do we mean by modular? Think of it like “building blocks”, where the data center will be composed of modular units of prefabricated mechanical, electrical, security components, etc., in addition to containerized servers.

Was there a key driver for the Generation 4 Data Center?

If we were to summarize the promise of our Gen 4 design into a single sentence it would be something like this: “A highly modular, scalable, efficient, just-in-time data center capacity program that can be delivered anywhere in the world very quickly and cheaply, while allowing for continued growth as required.” Sounds too good to be true, doesn’t it? Well, keep in mind that these concepts have been in initial development and prototyping for over a year and are based on cumulative knowledge of previous facility generations and the advances we have made since we began our investments in earnest on this new design.

One of the biggest challenges we’ve had at Microsoft is something Mike likes to call the ‘Goldilocks Problem’. In a nutshell, the problem can be stated as:

The worst thing we can do in delivering facilities for the business is not have enough capacity online, thus limiting the growth of our products and services.

The second worst thing we can do in delivering facilities for the business is to have too much capacity online.

This has led to a focus on smart growth for the business, driven by an ever more refined picture of overall demand. It can’t be too hot. It can’t be too cold. It has to be ‘Just Right!’ The capital investment involved is too large to commit without long-term planning. As we worked to master these challenges, we had to ensure that our technology plan also solved the business and operational challenges we faced.

So let’s take a high-level look at our Generation 4 design

Are you ready for some great visuals? Check out the Microsoft 4th Gen concept video on Soapbox. It came out of my Data Center Research and Engineering team, under Daniel Costello, and gives you a view into what we think is the future.

From a configuration, constructability and time-to-market perspective, our primary goal is to modularize the whole data center: not just the server side (as in the Chicago facility), but the mechanical and electrical space as well. This means using the same kinds of parts in pre-manufactured modules, being able to use containers, skids, or rack-based deployments, and being able to tailor redundancy and reliability requirements to each application at a very specific level.

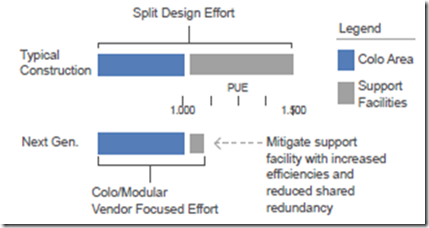

Our goals from a cost perspective were simple in concept but tough to deliver. First and foremost, we had to reduce the capital cost per critical megawatt for each class of use. Some applications can run with N-level redundancy (no spare capacity) in the infrastructure; others require more supporting infrastructure. These different classes of infrastructure requirements meant that optimizing cost for every class was paramount. At Microsoft, we are not a one-trick pony: we have many Online products and services (240+) that require different levels of operational support. We understood that and addressed it in our design, which will allow us to reduce capital costs by 20-40% or more depending on class.

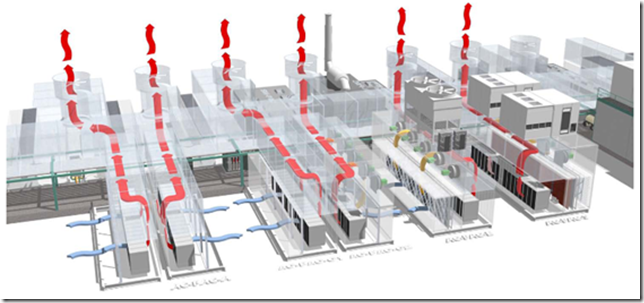

For example, non-critical or geo-redundant applications have low hardware reliability requirements at any single location. As a result, Gen 4 can be configured to provide stripped-down, low-cost infrastructure with little or no redundancy and/or temperature control. Let’s say an Online service team decides that, because of the dramatically lower cost, they will simply use uncontrolled outside air with temperatures ranging from 10 to 35 °C and relative humidity (RH) from 20 to 80%. In reality, we are already spec-ing this range for all of our servers today and are working with server vendors to broaden it even further as Gen 4 becomes a reality. For this class of infrastructure, we eliminate generators, chillers, and UPSs, and can potentially lower costs relative to traditional infrastructure.
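
To make that outside-air envelope concrete, here is a minimal Python sketch that screens hourly weather readings against it. The `AirSample` type and both functions are hypothetical illustrations, not Microsoft tooling; the bounds are the 10-35 °C and 20-80% RH figures quoted above, assumed here to be inclusive.

```python
from dataclasses import dataclass

# Envelope quoted in the post for uncontrolled outside air.
# Treating both bounds as inclusive is an assumption of this sketch.
MIN_TEMP_C, MAX_TEMP_C = 10.0, 35.0
MIN_RH_PCT, MAX_RH_PCT = 20.0, 80.0


@dataclass(frozen=True)
class AirSample:
    """One hypothetical hourly reading at a candidate site."""
    temp_c: float  # dry-bulb temperature, degrees Celsius
    rh_pct: float  # relative humidity, percent


def within_envelope(sample: AirSample) -> bool:
    """True if the reading is inside the 10-35 C / 20-80% RH envelope."""
    return (MIN_TEMP_C <= sample.temp_c <= MAX_TEMP_C
            and MIN_RH_PCT <= sample.rh_pct <= MAX_RH_PCT)


def hours_outside_envelope(samples: list[AirSample]) -> int:
    """Count hourly readings that fall outside the envelope."""
    return sum(1 for s in samples if not within_envelope(s))


if __name__ == "__main__":
    hourly = [AirSample(22.0, 45.0), AirSample(37.5, 30.0), AirSample(8.0, 85.0)]
    print(hours_outside_envelope(hourly))  # prints 2
```

Run over a year of weather data for a site, a count like this gives a rough sense of how often servers would see conditions outside their spec, which is the figure a service team would weigh against the lower cost of this class.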

Applications that demand a higher level of redundancy or temperature control will use Gen 4 configurations that meet those needs; however, they will also cost more (though still less than traditional data centers). We expect this cost difference to drive a change in engineering behavior: we predict more applications will move toward geo-redundancy to lower their costs.
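
The principle of mapping each application to the cheapest infrastructure class that still meets its needs can be sketched as a simple decision rule. This minimal Python illustration assumes three hypothetical classes and three yes/no attributes per application; the class names, attributes and example services are invented for illustration, since the post does not describe the actual Gen 4 class definitions.

```python
from dataclasses import dataclass
from enum import Enum


class DcClass(Enum):
    """Hypothetical infrastructure classes; not the published Gen 4 definitions."""
    BASIC = "no redundancy, uncontrolled outside air"
    CONDITIONED = "no redundancy, temperature-controlled air"
    REDUNDANT = "redundant power and cooling, temperature-controlled air"


@dataclass(frozen=True)
class AppProfile:
    name: str
    critical: bool            # does an outage at this site hurt the business?
    geo_redundant: bool       # can the service fail over to another site?
    needs_temp_control: bool  # does its hardware need tighter than outside-air conditions?


def map_to_class(app: AppProfile) -> DcClass:
    """Return the cheapest hypothetical class that still meets the app's needs."""
    # A critical app with no other site to fail over to needs a resilient site.
    if app.critical and not app.geo_redundant:
        return DcClass.REDUNDANT
    # The site may go down, but the hardware may still need conditioned air.
    if app.needs_temp_control:
        return DcClass.CONDITIONED
    # Non-critical or geo-redundant, and tolerant of outside air: stripped-down class.
    return DcClass.BASIC


if __name__ == "__main__":
    apps = [  # hypothetical services
        AppProfile("search-index", critical=True, geo_redundant=True, needs_temp_control=False),
        AppProfile("billing", critical=True, geo_redundant=False, needs_temp_control=True),
        AppProfile("batch-analytics", critical=False, geo_redundant=False, needs_temp_control=True),
    ]
    for app in apps:
        print(app.name, "->", map_to_class(app).name)
```

In this sketch, geo-redundancy is the only way for a critical application to leave the most expensive class, which is exactly the incentive toward geo-redundancy predicted above.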

Another cool thing about Gen 4 is that it allows us to deploy capacity when demand dictates. Once the design is finalized, we will no longer need to make large upfront investments. Imagine keeping capital costs closely in line with actual demand, greatly reducing time-to-market and bringing capacity online in the modular increments the design provides. The amount of construction labor required to put these “building blocks” together is also reduced: because the platform’s core components are pre-manufactured, on-site construction costs are lower. This allows us to maximize our return on invested capital.
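
A toy model shows why adding capacity in modules tracks demand more closely than a single upfront build. This Python sketch assumes a hypothetical per-period demand forecast in megawatts, a fixed module size, and zero lead time; real planning would have to build ahead of demand by however long a module takes to deliver. The numbers in the example are illustrative, not Microsoft data.

```python
import math
from itertools import accumulate


def jit_plan(demand_mw: list[float], module_mw: float) -> list[int]:
    """Modules to add in each period so online capacity never falls below demand.

    Assumes a module can come online within the period it is needed
    (zero lead time) and that capacity is never retired.
    """
    online = 0
    plan = []
    for demand in demand_mw:
        needed = math.ceil(demand / module_mw)
        added = max(0, needed - online)
        online += added
        plan.append(added)
    return plan


def upfront_plan(demand_mw: list[float], module_mw: float) -> list[int]:
    """Traditional approach: build for peak demand in the first period."""
    peak_modules = math.ceil(max(demand_mw) / module_mw)
    return [peak_modules] + [0] * (len(demand_mw) - 1)


def idle_mw(demand_mw: list[float], module_mw: float, plan: list[int]) -> list[float]:
    """Online capacity minus demand per period (the 'too much' side of Goldilocks)."""
    online_mw = [n * module_mw for n in accumulate(plan)]
    return [cap - d for cap, d in zip(online_mw, demand_mw)]


if __name__ == "__main__":
    demand = [1.5, 2.0, 3.8, 4.1]  # illustrative MW per period
    module = 1.25                  # illustrative MW per module
    for label, plan in [("jit", jit_plan(demand, module)),
                        ("upfront", upfront_plan(demand, module))]:
        print(label, plan, round(sum(idle_mw(demand, module, plan)), 2))
```

With these illustrative numbers, the just-in-time plan adds modules as [2, 0, 2, 0] instead of building 4 on day one, and carries less than half the idle capacity over the four periods.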

In our design process, we questioned everything. You may notice there is no roof, and some might be uncomfortable with this. We explored whether one was needed, and our research produced some surprising (positive) results showing that it wasn’t.

In short, we are striving to bring Henry Ford’s Model T factory (http://en.wikipedia.org/wiki/Henry_Ford#Model_T) to the data center. Gen 4 will move data centers from a custom design-and-build model to a commoditized manufacturing approach. We intend to have our components built in factories and then assembled very quickly in one location (the data center site). Think about how a computer, car or plane is built today: components are manufactured by different companies all over the world to a predefined spec and then integrated in one location based on demand and feature requirements. And just as Henry Ford’s assembly line dramatically drove down the cost and time-to-market of building automobiles, we expect Gen 4 to do the same for data centers. Everything will be pre-manufactured and assembled on the pad.

And did we mention that this platform will be, overall, incredibly energy efficient? From a total energy perspective, not only will we have remarkable PUE (Power Usage Effectiveness) values, but the total energy cost of building the facility will be greatly reduced as well. How much energy goes into making concrete? Will we need as much of it? How much energy goes into fueling construction vehicles? This will also be greatly reduced! A key driver is our goal to achieve an average PUE at or below 1.125 across our data centers by 2012. More than that, we are on a mission to reduce the overall amount of copper and water used in these facilities. We believe these will be the next areas of industry attention if and when the energy problem is solved. So we are asking today: “how can we build a data center with less building?”
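
PUE is the ratio of total facility energy to the energy delivered to IT equipment, so the 1.125 target allows 0.125 units of overhead (cooling, power distribution losses, lighting and so on) for every unit of IT energy. The short Python sketch below works through that arithmetic. Computing a fleet-wide figure as total facility energy over total IT energy is an assumption of this sketch; the post does not say how its average is calculated.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy includes IT energy, so it cannot be smaller")
    return total_facility_kwh / it_equipment_kwh


def overhead_budget_kwh(it_equipment_kwh: float, target_pue: float = 1.125) -> float:
    """Non-IT energy a site may use while still meeting target_pue."""
    return it_equipment_kwh * (target_pue - 1.0)


def fleet_pue(sites: list[tuple[float, float]]) -> float:
    """Energy-weighted fleet PUE from (total_facility_kwh, it_equipment_kwh) pairs."""
    total = sum(t for t, _ in sites)
    it = sum(i for _, i in sites)
    return pue(total, it)


if __name__ == "__main__":
    print(pue(1125.0, 1000.0))          # prints 1.125
    print(overhead_budget_kwh(1000.0))  # prints 125.0
    print(fleet_pue([(1125.0, 1000.0), (2400.0, 2000.0)]))
```

Weighting by energy means large sites dominate the fleet figure: in the example, a site at 1.125 and a site at 1.2 with twice the IT load combine to 1.175, not the simple mean of 1.1625.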

We have talked openly and publicly about building chiller-less data centers and running our facilities with aggressive outside-air economization. Our sincerest hope is that Gen 4 will completely eliminate the use of water. Today’s data centers use massive amounts of water; we see water as the next scarce resource and have decided to take a proactive stance by making water conservation part of our plan.

By sharing this with the industry, we believe everyone can benefit from our methodology. While this concept and approach may be intimidating (or downright frightening) to some in the industry, disclosure ultimately is better for all of us.

The Gen 4 design (even more than containers alone) could reduce the ‘religious’ debates in our industry. With the central spine infrastructure in place, containers or pre-manufactured server halls can be AC or DC, air-side economized or water-side economized, or not economized at all (though the sanity of that might be questioned). Gen 4 will allow us to decommission, repair and upgrade quickly because everything is modular. No longer will we be governed by the initial decisions made when constructing the facility. We will have almost unlimited use and re-use of the facility and site. We will also be able to use power in an ultra-fluid fashion, moving load from critical to non-critical as use and capacity requirements dictate.

Finally, we believe this is a big game changer. Gen 4 will provide a standard platform that our industry can innovate around. For example, all modules in our Gen 4 will have common interfaces clearly defined by our specs, and any vendor that meets these specifications will be able to plug into our infrastructure. Whether you are a computer vendor, UPS vendor, generator vendor, etc., you will be able to plug and play into our infrastructure. This also means we can source from anyone, anywhere on the globe, to minimize costs and maximize performance. We want to help motivate the industry to innovate further, with innovations from which everyone can reap the benefits.
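
The plug-and-play idea rests on vendors meeting a published interface spec. A minimal Python sketch of that check follows; every field name and limit in it is hypothetical, chosen only to show the pattern of validating a vendor’s declared module against a common spec before it is connected to the spine. The actual Gen 4 interface specs are not described in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InterfaceSpec:
    """Hypothetical spine interface requirements (illustrative only)."""
    power_types: frozenset[str]  # supply types the spine supports, e.g. {"AC", "DC"}
    max_power_kw: float          # largest rating one spine connection accepts
    max_length_m: float          # longest module that fits a slot on the pad


@dataclass(frozen=True)
class ModuleDescriptor:
    """What a vendor declares about its module (hypothetical fields)."""
    vendor: str
    kind: str        # e.g. "IT container", "UPS skid", "generator"
    power_type: str
    rated_kw: float  # rated power at the spine connection
    length_m: float


def conformance_problems(module: ModuleDescriptor, spec: InterfaceSpec) -> list[str]:
    """Return every way the module violates the spec; an empty list means it can plug in."""
    problems = []
    if module.power_type not in spec.power_types:
        problems.append(f"power type {module.power_type!r} not supported")
    if module.rated_kw > spec.max_power_kw:
        problems.append(f"{module.rated_kw} kW exceeds {spec.max_power_kw} kW limit")
    if module.length_m > spec.max_length_m:
        problems.append(f"{module.length_m} m exceeds {spec.max_length_m} m slot")
    return problems


if __name__ == "__main__":
    spec = InterfaceSpec(frozenset({"AC", "DC"}), max_power_kw=500.0, max_length_m=12.2)
    modules = [
        ModuleDescriptor("Vendor A", "IT container", "DC", 450.0, 12.2),
        ModuleDescriptor("Vendor B", "UPS skid", "AC", 650.0, 6.0),
    ]
    for m in modules:
        print(m.vendor, m.kind, conformance_problems(m, spec) or "OK")
```

Returning a list of problems rather than a single yes/no lets a vendor see every gap against the spec at once.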

To summarize, the key characteristics of our Generation 4 data centers are:

- Scalable

- Plug-and-play spine infrastructure

- Factory pre-assembled: Pre-Assembled Containers (PACs) & Pre-Manufactured Buildings (PMBs)

- Rapid deployment

- De-mountable

- Reduced time to market (TTM)

- Reduced construction

- Sustainable measures

- Map applications to DC Class

We hope you join us on this incredible journey of change and innovation!

Long hours of research and engineering time have gone into this process. There are still some long days and nights ahead, but the vision is clear. Rest assured, however, that as we refine Generation 4, the team will soon be looking to Generation 5 (even if it is a bit farther out). There is always room to get better.

So if you happen to come across Goldilocks in the forest and wonder why she is smiling, you will know that she feels very good about getting very close to ‘JUST RIGHT’.

Generations of Evolution – some background on our data center designs

We thought you might be interested in what happened in the first three generations of our data center designs. When Ray Ozzie wrote his Software plus Services memo, it posed a very interesting challenge to us. The winds of change were at ‘tornado’ proportions. That “plus Services” tag had some significant (and unstated) challenges inherent to it. The first was that Microsoft was going to evolve even further into an operations company. While we had been running large-scale Internet services since 1995, this development led us to an entirely new level. Additionally, these “services” would span both Internet and Enterprise businesses. Those of you who have to operate “stuff” know that these are two very different worlds in operational models and challenges. It also meant that, to achieve the same required level of reliability and performance, our infrastructure was going to have to scale globally and in a significant way.

It was in that intense atmosphere of change that we first started re-evaluating data center technology and processes in general, and our ideas began to reach farther than what was accepted by the industry at large. This was the era of Generation 1. Generation 1 reflects where most of the world’s data centers are today (and where our facilities were): it represented all the known learning and design requirements that had been in place since IBM built the first purpose-built computer room. These facilities focused on uptime, reliability and redundancy. Big infrastructure was held accountable for solving all potential environmental shortfalls. This is where the majority of infrastructure in the industry still is today.

We soon realized that traditional data centers were quickly becoming outdated. They were not keeping up with the demands of what was happening technologically and environmentally. That’s when we kicked off our Generation 2 design. Gen 2 facilities started taking into account sustainability and energy efficiency, and really looked at the total cost of energy and operations. No longer did we view data centers solely in terms of upfront capital costs; we took a hard look at each facility over the course of its life. Our Quincy, Washington and San Antonio, Texas facilities are examples of our Gen 2 data centers, where we explored and implemented new ways to lessen the impact on the environment. These facilities are considered two leading industry examples, based on their energy efficiency and their ability to run and operate at new levels of scale and performance by leveraging clean hydro power (Quincy) and using recycled waste water to cool the facility during peak cooling months (San Antonio).

As we were delivering our Gen 2 facilities in steel and concrete, our Generation 3 facilities were rapidly driving the evolution of the program. The key concepts for our Gen 3 design are increased modularity and a greater focus on energy efficiency and scale. The Gen 3 facility will be best represented by the Chicago, Illinois facility currently under construction. This facility will seem very foreign compared to the traditional data center concepts most of the industry is comfortable with. In fact, if you ever spend time in our container hangar in Chicago, it will look incredibly different from a traditional raised-floor data center. We anticipate this modularization will drive huge efficiencies in cost and operations for our business. We will also introduce significant changes in the environmental systems used to run our facilities. These concepts and processes (where applicable) will help us gain even greater efficiencies in our existing footprint, allowing us to further maximize infrastructure investments.

This industry is definitely a journey, not a destination. In fact, our Generation 4 design has been under heavy engineering for viability and cost for over a year. While the demands of our commercial growth required us to make investments as we grew, we treated each step in the learning as an opportunity for further innovation in data centers. The design for our future Gen 4 facilities enabled us to make visionary advances that address the challenges of building, running, and operating facilities in one concerted effort.

/Mm/Dc/Cb

I was unable to find the document from Michael Manos. Can you publish the link?

thanks

JT – I am not sure what you are looking for. Did the post not display?

This is an awesome effort from Microsoft and it will benefit everyone. It would have a positive impact on global warming and sounds very eco-friendly (reduced water consumption). 🙂

Great Innovation!!!

Very, very cool. I’d love to be involved in something like this!

I agree, Bryan.

Mike,

Great article. Is this similar to what Rackable is doing with their IceBox and Sun with their BlackBox?

Are you guys planning to build these on your own, or using Rackable / Sun?

Greg –

This is quite a bit more. We have espoused the use of containerized servers and equipment for some time. In fact, our Chicago facility is specifically geared towards handling containerized IT load like the examples you mentioned above. What we are doing here is componentizing and containerizing the entire data center eco-system. This will give us greater flexibility, greater cost control and greater efficiency, and will allow us to move very quickly as demand dictates.

/Mm

Mike,

Have you considered a chimney system for fanless cooling? Also, it appears that the container is a waste of money and materials: if you look at regular shipping, nobody keeps the container and the pallets. In addition, regular servers are also extremely wasteful (a marketing and value-perception trick for customers).

My 2c

Interesting, I didn’t know people were already using the Iceboxes.

The Chicago facility is already using them?

Greg –

We are in full container testing in Chicago. Won’t tell you who we are going with, though :).

/Mm

Nick –

It’s all about how you optimize the ENTIRE eco-system. We have indeed looked at chimney stacks (heat does naturally move up, after all 🙂). Containerization has huge benefits (chimneys are just another form of heat containment).

I’m not sure I totally follow your waste statement. In this model, the package the equipment comes in is the same package that’s plugged into the facility. It’s far cheaper to ship one container than it is to individually ship 2,500 discrete servers. From an end-product waste perspective, I don’t have to get rid of boxes and styrofoam packaging any longer.

With regard to regular servers being wasteful, I guess it depends on perspective. How much is a unit of work for an individual product or service worth? It’s somewhat subjective. However, if you are talking about the general efficiency of power supplies, motherboards, etc., then I can tell you that we are optimizing for the most efficient available today.

/Mm

Hey,

I didn’t know it was secret, sorry.

I was just wondering whether the whole server-in-a-box idea is a fad or whether there is something to it. I know a lot of people have been hyping it.

Could you say how many containers you’re using? Or are you just testing for now?

-Greg

Greg – No secret…. 🙂

Some think it’s a fad, I guess. But we look at them as scale units for cloud-scale compute. The facility in Chicago can house between 100 and 200 containers depending on configuration.

/Mm

In US data centers, it’s apparently acceptable and customary to put drinking water into a cooling device and just dump the warmed-up water into the sewer?

So using a closed water system, which sounds to me completely normal for every car wash and steel mill since the ’80s, is considered innovation here?

For the rest: interesting views, and very smart thinking to get rid of the roof, save a lot of energy and be ultra-flexible.

However, the great outdoors makes physical sabotage, vandalism and pyromaniac activities a lot simpler and a lot more tempting. What about securing a site like this?

mmanos,

““A highly modular, scalable, efficient, just-in-time data center capacity program that can be delivered anywhere in the world very quickly and cheaply, while allowing for continued growth as required.” Sounds too good to be true, doesn’t it?” …. https://loosebolts.wordpress.com/2008/12/02/our-vision-for-generation-4-modular-data-centers-one-way-of-getting-it-just-right/

Sounds Similarly Parallel with http://amanfrommars.baywords.com/ai-virtual-os/

The Virtual System has an Engaging Remote Control Facility for Reality Production.

And already Shared and MBedded, Patently TransParently Message Boarded with Azure …. http://blogs.msdn.com/jennifer/archive/2008/10/28/what-is-windows-azure.aspx#comments.

Ghost Hosting for Quantum Communications Fields? A Question Mark always Suggests the Rhetorical and therefore the Probable Possibility of a Most Definitive Certainty.

Mike,

Have you evaluated in-cabinet cooling, i.e., self-cooled cabinets with chillers outside the data center? What problems are there?

Dear Mike,

You, or rather Microsoft, are creating a turnaround in the data center world with the Gen 4 approach, which I absolutely believe in.

Out-of-the-box thinking in its finest form has resulted in an out-of-the-box data center: the most flexible plug-and-play version of a plant, allowing fully flexible growth and adaptation of modules. Modules for data, power or cooling can be changed whenever required. Market developments can easily be followed, and modernization, if needed, is relatively easy.

It must have been great fun to develop this approach, because it looks as simple as LEGO blocks put together with great imagination. Although I can imagine there are still quite a few questions to be answered, I am sure this type of plant will be connecting the cloudy world of future computing throughout the world in the near future.

As partnering in such developments seems essential, I expect you might have had some difficulty selecting people to join your thinking sessions. If in doubt, and if you consider the Netherlands as a favourite location for one of your Gen 4 centres, please don’t hesitate to contact me. Out-of-the-box thinking is second nature to me, and your ideas and plans prove that such ideas can only survive when the team has belief and guts.

Thanks for this eye-opener and Microsoft … Congratulations!!

Regards,

Frank A.M.

Hello Mike,

Great article! We have a product that you’ve got to evaluate for your container based data centers. It further condenses the amount of computing power a single container can hold. The product is called Chameleon and is the highest density fiber patching system on the market. I’m sure you’re the first at Microsoft to learn of its existence as we just launched it.

Current fiber patching systems can patch a maximum of 288 fibers per 4U chassis. Our product can patch 1728 in the same space, a six-fold increase in density and a six-fold reduction in space requirements. With our patching system, I wonder how many additional servers could be placed in a container? Could your inventory of containers be reduced as the density increases? How much money might this save? Your thoughts?

Regards,

Evan D. Owen

Thank you, Microsoft, for taking a leadership role in the off-site construction industry. I have long had a vision of what off-site fabrication could mean for the US construction industry, after watching Europe continue to demonstrate advancements in modular construction while the US was slow to accept this methodology. Building faster, smaller, greener and more cost-effectively, off-site is the way to go.

http://pcxcorp.com/Westfield/Roseville.htm

The link shows but one example of what is possible offsite if you create a vision. Westfield accelerated the construction schedule while reducing total costs.

PCX would love to partner on any of these projects!

Dean Di Lillo

VP of Marketing and Business Development

That’s the power of cloud computing!!!

Microsoft is thinking big, and it will pay off in the long run.

Mike:

Will your data center in West Des Moines, Iowa be generation 4?

Thanks – Jeff

Mike,

I like the idea of a repeatable system and I LOVE the detailed explanations you have provided. There is no doubt you are a visionary and will be looked up to for your drive and conviction to put this out. Well done!

What are your thoughts on making this repeatable around the world?

Containers are large…may not fit on narrow roads in developing countries. Roads are even narrower when you’re heading for the wilderness 🙂

Will MSFT build the infrastructure around the site? Work with governments abroad to improve infrastructure? Or just work in countries where infrastructure meets their needs?

Are you looking at the “accordion version” of a container that can shrink for transport like some RVs do?

Also, have any renewable energy sources been considered? What types?

But you see sometimes the technology out-paces our capacity to take advantage of it fully. So things slow down a little bit until the new paradigm has time to become the ‘ordinary’.

Bryan

Mario – You would need to work with the folks at Microsoft to get permission, as they are the actual owners of such graphics. At the time of the posting, I was given permission to post on my private blog.

/Mm

Thanks. Do you know whom I can contact for that?

thanks,

mario

Mario,

I have sent you contact information via e-mail.

Cheers!

Mike